The Real Price of AI: Pre-Training Vs. Inference Costs

AI inference costs far outweigh training. Learn why they're rising & how to optimize model deployment, hardware & techniques for sustainable AI value.

Key Takeaways

Here are five key takeaways from the article:

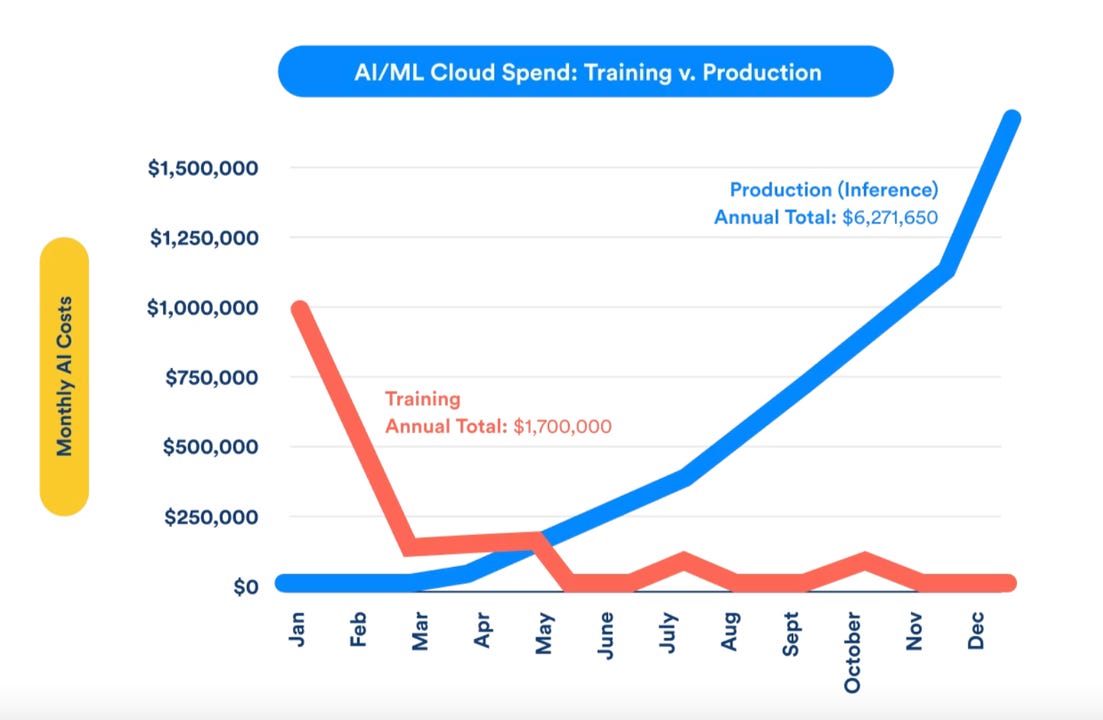

For most companies using AI, the ongoing cost of running models daily (inference) vastly outweighs the initial training cost, potentially accounting for 80-90% of the total lifetime expense.

Overall inference costs are rising significantly due to wider business adoption of AI, the demand for real-time performance, the increasing complexity and size of models, and the growing volume of data processed.

Proactively managing and optimizing inference costs is becoming critical to achieving real value and return on investment from generative AI deployments; neglecting them is a major roadblock.

Strategies to control inference expenses include choosing the right-sized model for the task, applying optimization techniques like quantization and pruning, making smart hardware choices, and using efficient deployment methods like batching.

Successfully leveraging AI requires a shift towards managing AI systems as continuously operated products, focusing on optimizing both performance and cost efficiency throughout their lifecycle.

In 2023, I started Multimodal, a Generative AI company that helps organizations automate complex, knowledge-based workflows using AI Agents.

We're seeing truly rapid advancements in generative AI. It feels like every week brings new capabilities. A lot of the discussion centers on the huge effort and the significant amount of hardware needed to train large language models (LLMs).

But here’s something critical that often gets missed: for almost any company actually putting AI to work, the day-to-day running (the inference stage) tells a very different cost story. Once a model is trained, the real cost accumulation begins: inference can account for 80%, maybe even 90%, of the total dollars spent over a model's active life. This cost is driven by factors like how many users are querying the model, how often, and the volume of input and output tokens processed for each request.

Let’s put it plainly: not planning for and managing this inference cost is becoming a major roadblock to getting real value and ROI from AI. In this article, we’ll talk about inference costs versus pretraining costs, and how to manage them.

Demystifying AI Workloads: Training vs. Inference

To really grasp the cost dynamics, it helps to clearly separate the two main workloads for generative AI models: training and inference. Think of training as the intense, upfront effort and inference as the ongoing operational work.

First, let's talk about training. You can picture training like sending a model to university. It’s an intensive process where the goal is to teach the model patterns, structures, and relevant information from vast amounts of data.

This requires a significant amount of compute power, often using specialized hardware like GPUs or TPUs running for extended periods. It involves crunching through data, adjusting millions or billions of parameters until the model learns effectively. This process results in a large, one-time (or periodic, if retraining) cost factor. It’s where those headlines about massive hardware demands for large language models originate.

Now, compare that to inference, or LLM inference specifically. This is like the graduate actually working their job day-to-day. The goal here isn't learning; it's applying what was learned during training to new, unseen input data. When users interact with a generative AI application, their prompts are converted into input tokens, and the model performs inference to generate output tokens, which could be text, code, or analysis.

Each individual inference task requires far less compute than a training run, but here’s the key: it happens constantly, at scale, for potentially thousands or millions of users. This continuous demand, focused on speed and model performance for a good user experience, is what drives the cumulative inference cost. Achieving efficient inference often requires careful tuning of the software infrastructure.

This leads to a significant imbalance. For most companies deploying these models, the ongoing inference cost vastly outweighs the initial training cost. It’s common for inference to account for 80-90% of the total compute dollars spent over a given model's production lifecycle. Why? Simply frequency and scale. The model serves far more requests during its operational life than the number of batches processed during its training. This trend makes understanding and reducing inference costs a critical focus for any company looking to deploy AI sustainably.
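To make the 80-90% figure more tangible, here is a back-of-the-envelope sketch in Python. Every number in it (the one-time training or fine-tuning cost, the traffic, the token counts, and the per-token prices) is a hypothetical assumption chosen for illustration, not data from a real deployment.

```python
# All figures below are hypothetical; substitute your own numbers.

def monthly_inference_cost(requests_per_month, input_tokens, output_tokens,
                           price_in_per_million, price_out_per_million):
    """Monthly spend in dollars for a model billed per token."""
    cost_per_request = (input_tokens * price_in_per_million
                        + output_tokens * price_out_per_million) / 1_000_000
    return requests_per_month * cost_per_request


def inference_share(training_cost, monthly_cost, months_in_production):
    """Fraction of total lifetime spend that goes to inference."""
    inference_total = monthly_cost * months_in_production
    return inference_total / (training_cost + inference_total)


monthly = monthly_inference_cost(
    requests_per_month=2_000_000,  # assumed traffic
    input_tokens=1_500,            # assumed average prompt size
    output_tokens=500,             # assumed average response size
    price_in_per_million=1.00,     # assumed $ per 1M input tokens
    price_out_per_million=3.00,    # assumed $ per 1M output tokens
)
share = inference_share(training_cost=25_000,  # assumed one-time cost
                        monthly_cost=monthly,
                        months_in_production=24)

print(f"Monthly inference cost: ${monthly:,.0f}")
print(f"Inference share of lifetime cost: {share:.0%}")
```

With these assumed inputs, inference comes to about $6,000 per month and roughly 85% of the two-year total. The share moves quickly with traffic and time in production, which is the point: frequency and scale, not the cost of any single request, drive the imbalance.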

Why Inference Costs are Skyrocketing

It might seem counterintuitive. We constantly hear about rapid advancements making things cheaper, maybe even hinting at a Moore's Law equivalent for AI cost efficiency. LLM providers often slash the price per million tokens processed.

Yet, for many organizations, the total bill for running AI models—the inference cost—keeps climbing. This isn't a contradiction; it's the result of several powerful trends converging. Let's break down the key factors driving this demand and pushing up the overall dollars spent on inference.
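A quick worked example shows why a falling unit price and a rising bill can coexist. The prices and token volumes below are hypothetical, picked only to illustrate the arithmetic.

```python
def total_bill(price_per_million_tokens, tokens_per_month):
    """Monthly bill in dollars for a given unit price and token volume."""
    return price_per_million_tokens * tokens_per_month / 1_000_000


# Hypothetical "before": higher unit price, modest usage.
before = total_bill(price_per_million_tokens=10.0, tokens_per_month=500_000_000)

# Hypothetical "after": the unit price is halved, but usage grows sixfold.
after = total_bill(price_per_million_tokens=5.0, tokens_per_month=3_000_000_000)

print(f"Before: ${before:,.0f} per month")
print(f"After:  ${after:,.0f} per month ({after / before:.1f}x)")
```

In this sketch the price per million tokens drops by 50%, yet the monthly bill triples because volume grows faster than the price falls.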

Explosion in AI Deployment and Usage

The single biggest factor is simply that generative AI is going mainstream within businesses. It's moving rapidly from isolated experiments to being woven into core operations across almost every industry.

Recent data highlights this adoption curve; for instance, figures cited by TechRadar Pro based on IBM research indicated that around 42% of large companies were already actively using AI, with another 40% actively exploring it. This wider deployment means more models are running across more business functions—customer service, marketing, software development, internal analysis, you name it.

Naturally, more active models serving a larger number of users translates directly into a higher volume of inference calls. Each time an employee uses an AI assistant or a customer interacts with an AI-powered feature, an inference request is made. As usage scales, the aggregate compute demand balloons, driving up the total inference cost for the company, even if the cost per individual interaction is relatively low. This widespread adoption is fundamentally reshaping the market demand for inference capacity.

The Need for Speed: Real-Time Performance

Many of the most valuable AI applications demand immediate results. Think about conversational AI agents, real-time fraud detection systems, or dynamic recommendation engines. Users expect instant responses; lag kills the experience and diminishes the value. Achieving this low latency, or minimizing inference time, often requires significant investment in high-performance hardware infrastructure.

As discussed in a previous article comparing AI hardware, achieving top-tier speed for complex models often means relying on specialized, powerful accelerators. This includes NVIDIA's high-end GPUs like the H100 or H200, Google's TPUs (especially inference-focused versions like TPU v5e), or AWS's purpose-built Inferentia chips (like Inferentia2).

These hardware options deliver excellent performance but come at a premium price compared to general-purpose CPUs or older GPUs. There's an inherent trade-off: faster speed often means higher infrastructure cost per instance, which directly impacts the inference cost per query. Companies must balance the desired model performance against the budget, making hardware selection a critical factor in managing the final bill from cloud providers like AWS, Google Cloud, or Microsoft Azure.

The Complexity Factor: Bigger Models and Deeper Reasoning

Another major driver is the increasing complexity of the models themselves. The state-of-the-art large language models that capture headlines often boast hundreds of billions, or even trillions, of parameters. This sheer model size inherently requires more computation for each inference task compared to smaller models. Processing the input tokens and generating the required output token sequence for these massive proprietary models or even large open models is computationally intensive.

Furthermore, we're moving beyond simple pattern recognition or classification towards models capable of sophisticated reasoning. These AI systems are designed to analyze complex situations, break down problems, potentially query external data sources like a vector database for relevant information, evaluate options, and create multi-step plans or detailed analyses. This reasoning process is far more computationally demanding than, say, simple image classification.

Consider this example: having an AI classify a picture requires one type of inference process. But asking an AI agent to analyze a detailed customer complaint, understand the context, retrieve purchase history, identify relevant policy clauses, compare potential solutions, and then generate a personalized, multi-paragraph resolution plan involves a far more complex sequence.

Such tasks might even involve the AI agent making multiple internal inference calls to reflect, plan, and refine its output before presenting the final response. This multi-step reasoning multiplies the compute demand, and thus the inference cost, of a single user interaction, making these advanced capabilities considerably more expensive to run at scale.
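The multiplication effect can be sketched with simple token accounting. The model below is a deliberate simplification and an assumption on my part: each internal step re-reads the full context so far (no prompt caching) and generates a fixed number of tokens. Real agent frameworks vary, and the token counts are hypothetical.

```python
def agent_token_usage(prompt_tokens, steps, tokens_generated_per_step):
    """Return (input_tokens, output_tokens) summed over all internal steps.

    Assumes each step re-reads the whole context accumulated so far.
    """
    total_in = 0
    total_out = 0
    context = prompt_tokens
    for _ in range(steps):
        total_in += context                    # the model reads everything so far
        total_out += tokens_generated_per_step
        context += tokens_generated_per_step   # this step's output joins the context
    return total_in, total_out


single = agent_token_usage(prompt_tokens=1_000, steps=1, tokens_generated_per_step=300)
multi = agent_token_usage(prompt_tokens=1_000, steps=5, tokens_generated_per_step=300)

print("Single call:", single, "total:", sum(single))
print("Five steps: ", multi, "total:", sum(multi))
```

Under these assumptions, five internal steps process 9,500 tokens against 1,300 for a single call, more than seven times as many, because input tokens grow with every step rather than staying flat.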

The Unrelenting Data Deluge

Finally, the ever-increasing volume of data being generated and consumed plays a role. More data often translates to more input being fed into models for inference, whether it's analyzing larger documents, processing more customer interactions, or monitoring real-time data streams from sensors or financial markets. This constant flow of input data keeps the inference engines busy, contributing to the overall workload and cost.

While optimizations like model quantization and hardware improvements work towards reducing inference costs on a per-unit basis, the combined effect of wider adoption, the demand for real-time speed, the trend towards larger and more complex reasoning models, and growing data volumes is causing the total inference spend for many organizations to rise significantly.

The AI inference market's projected growth reflects this reality. This makes understanding, measuring, and actively managing inference cost not just a technical challenge, but a critical business imperative for any company looking to achieve sustainable and efficient AI deployment at scale.

Strategies for Taming Inference Costs

Understanding that inference is the dominant cost factor is the first step. The next, crucial step is actively managing and reducing these costs. Thankfully, there are several effective strategies companies can employ, ranging from model selection to deployment tactics and infrastructure choices. Implementing these can significantly improve the ROI and sustainability of your generative AI initiatives.

Right-Sizing Your Models

It's tempting to always reach for the largest, most powerful large language models available, whether proprietary models or open models. However, this is often overkill and unnecessarily expensive for many tasks. A core principle of reducing inference costs is model right-sizing.

Avoid the Sledgehammer: Don't use a massive, multi-billion parameter model for a task that a much smaller model could handle effectively. Analyze the specific requirements of the application. Does it truly need the nuanced generation capabilities of a giant LLM, or would a task-specific, smaller model suffice for classification, extraction, or simpler Q&A?

Explore Task-Specific Options: Often, smaller models trained or fine-tuned for a specific industry or function can deliver excellent performance at a fraction of the inference cost. Sometimes, a "good enough" model performance achieves the business goal much more profitably. Compare the capabilities needed versus the price of running different models.

Optimize, Optimize, Optimize

Once you've selected a model (or models), significant cost savings can often be achieved through optimization techniques before deployment. These methods aim to make the given model run more efficiently on the hardware, reducing inference time and resource consumption. Key techniques include:

Quantization: Think of this as reducing the numerical precision of the model's parameters (weights). Instead of using highly precise numbers (like 32-bit floats), quantization might use less precise formats (like 16-bit floats or 8-bit integers). This makes the model smaller and faster to run, often with minimal impact on accuracy for many tasks. It's like using slightly less precise measurements that are much quicker but still accurate enough for the job.

Pruning: This involves identifying and removing redundant or less important connections (parameters) within the neural network, effectively making the model smaller and leaner without significantly degrading its performance. Imagine carefully trimming unnecessary branches from a tree to make it lighter.

Distillation: Here, you train a smaller, more compact model (the "student") to mimic the behavior and output of a larger, more complex model (the "teacher"). The goal is to transfer the knowledge to a more efficient model that's cheaper to run for inference.
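The first two techniques above, quantization and pruning, can be illustrated on a toy list of weights. This is a minimal sketch in plain Python, assuming symmetric per-tensor int8 quantization and simple magnitude-based pruning; production tooling works on whole tensors and uses more refined schemes. The weight values are made up. Distillation is left out because it requires an actual training loop.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] plus one scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute values."""
    n_prune = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:n_prune])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.82, -0.03, 0.41, -1.27, 0.005, 0.66, -0.09, 0.30]  # made-up values

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
pruned = prune_by_magnitude(weights, sparsity=0.5)

print("int8 values:", q)
print("largest rounding error:", max_error)
print("pruned weights:", pruned)
```

The rounding error per weight is bounded by half the scale, which is why accuracy often holds up well, and each weight now needs one byte instead of four. Pruning at 50% sparsity keeps only the four largest-magnitude weights here; whether a real model tolerates that much pruning has to be measured on the task.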

Smart Infrastructure Choices

The hardware and cloud infrastructure you use for inference have a massive impact on both speed and cost. Making informed choices here is critical.

Dedicated Inference Hardware: Specialized chips are designed explicitly for efficient inference. Options like AWS Inferentia2, Google's TPU v5e, and NVIDIA's GPUs (including powerhouses like the H100 and H200, though sometimes overkill if not needed for speed) can offer significantly better performance per watt and performance per dollar compared to general-purpose CPUs for AI workloads. Selecting the right instance type is key.

Leverage Cloud Provider Services: Major cloud providers (AWS, Google Cloud, Microsoft Azure) offer managed inference services (e.g., SageMaker Inference, Vertex AI Prediction, Azure ML Endpoints). These services often handle scaling, provide optimized software environments, and sometimes integrate model optimization tools, simplifying deployment and potentially lowering costs.

Dynamic/Serverless Inference: For workloads with variable demand, serverless inference platforms can be highly cost-effective. You pay primarily for the compute time consumed during active inference calls, rather than paying for idle instances.

Consider Edge Computing: For applications requiring very low latency or processing sensitive data, running inference directly on edge devices (closer to where data is generated) can sometimes reduce costs associated with data transfer and reliance on centralized cloud infrastructure.
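For the choice between an always-on instance and serverless inference, a rough break-even calculation helps. The sketch below assumes a dedicated instance billed per hour and a serverless platform billed per second of active compute. All prices are hypothetical, and it ignores cold starts, minimum billing increments, and any difference in per-request speed between the two options.

```python
HOURS_PER_MONTH = 730  # average hours in a month


def dedicated_monthly_cost(hourly_rate):
    """An always-on instance is billed whether or not it serves traffic."""
    return hourly_rate * HOURS_PER_MONTH


def serverless_monthly_cost(requests_per_month, seconds_per_request, price_per_second):
    """Serverless is billed only for the compute time of active requests."""
    return requests_per_month * seconds_per_request * price_per_second


def break_even_requests(hourly_rate, seconds_per_request, price_per_second):
    """Monthly request volume at which both options cost the same."""
    cost_per_request = seconds_per_request * price_per_second
    return dedicated_monthly_cost(hourly_rate) / cost_per_request


# Hypothetical prices for illustration only.
threshold = break_even_requests(hourly_rate=2.00,
                                seconds_per_request=0.5,
                                price_per_second=0.001)
print(f"Break-even: about {threshold:,.0f} requests per month")
```

Below the break-even volume, paying per request is cheaper; above it, a dedicated instance wins, provided that instance can actually absorb the load.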

Efficient Deployment Strategies

How you serve inference requests also matters. Simple adjustments can yield efficiency gains.

Batching: Instead of processing inference requests one by one as they arrive, batching involves grouping multiple requests together and processing them simultaneously. This often improves hardware utilization (especially on GPUs/TPUs) and can significantly increase throughput, lowering the cost per inference.

Caching: For applications where identical input prompts are common, implementing a cache to store and quickly return previous results can avoid redundant model computations, saving both time and cost.
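Both ideas above, batching and caching, can be combined in a thin wrapper around a model. This is a minimal sketch, not a production serving layer: the class name `CachedBatchedModel` and the `run_batch` callable are hypothetical, the cache matches only byte-identical prompts, and real inference servers typically batch dynamically based on arrival time as well.

```python
import hashlib
from typing import Callable, Dict, List


class CachedBatchedModel:
    """Wraps a batch-capable model with an exact-match cache (illustrative only)."""

    def __init__(self, run_batch: Callable[[List[str]], List[str]],
                 max_batch_size: int = 8):
        self._run_batch = run_batch          # hypothetical: takes prompts, returns outputs
        self._max_batch_size = max_batch_size
        self._cache: Dict[str, str] = {}
        self.model_calls = 0                 # number of batches sent to the model

    @staticmethod
    def _key(prompt: str) -> str:
        return hashlib.sha256(prompt.encode("utf-8")).hexdigest()

    def generate(self, prompts: List[str]) -> List[str]:
        # Collect each uncached prompt once, preserving arrival order.
        pending: List[str] = []
        seen = set()
        for prompt in prompts:
            key = self._key(prompt)
            if key not in self._cache and key not in seen:
                seen.add(key)
                pending.append(prompt)

        # Send the uncached prompts to the model in fixed-size batches.
        for start in range(0, len(pending), self._max_batch_size):
            batch = pending[start:start + self._max_batch_size]
            outputs = self._run_batch(batch)
            self.model_calls += 1
            for prompt, output in zip(batch, outputs):
                self._cache[self._key(prompt)] = output

        return [self._cache[self._key(prompt)] for prompt in prompts]


def fake_model(batch: List[str]) -> List[str]:
    """Stand-in for a real model so the sketch runs on its own."""
    return [f"echo: {prompt}" for prompt in batch]


model = CachedBatchedModel(fake_model, max_batch_size=2)
first = model.generate(["a", "b", "a", "c"])   # three unique prompts, two batches
calls_after_first = model.model_calls
second = model.generate(["a", "c"])            # served entirely from the cache
```

In this run, four requests reach the model as two batches, and the follow-up requests trigger no model calls at all. Exact-match caching only pays off when identical prompts recur, so it is worth measuring the hit rate before relying on it.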

Continuous Monitoring and Cost Tracking

Finally, managing inference cost isn't a one-time setup; it requires ongoing attention.

Implement MLOps: Robust Machine Learning Operations (MLOps) practices are essential. This includes setting up monitoring to track not just model performance and latency, but also the actual inference cost per prediction, per user, or per transaction.

Regular Review: Continuously analyze usage patterns and cost data. Are certain features driving disproportionate costs? Can models be further optimized or right-sized based on real-world performance? This data-driven approach allows for ongoing optimization to keep inference spending aligned with business value.
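Cost tracking of this kind does not need heavy tooling to get started. Here is a minimal sketch that attributes token spend to product features; the `CostTracker` class, the feature names, and the prices are all hypothetical, and a real setup would export these numbers to whatever monitoring stack is already in place.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class CostTracker:
    """Accumulates inference spend per feature (illustrative only)."""
    price_in_per_million: float    # assumed $ per 1M input tokens
    price_out_per_million: float   # assumed $ per 1M output tokens
    spend_by_feature: dict = field(default_factory=lambda: defaultdict(float))
    requests_by_feature: dict = field(default_factory=lambda: defaultdict(int))

    def record(self, feature, input_tokens, output_tokens):
        """Log one request and return its cost in dollars."""
        cost = (input_tokens * self.price_in_per_million
                + output_tokens * self.price_out_per_million) / 1_000_000
        self.spend_by_feature[feature] += cost
        self.requests_by_feature[feature] += 1
        return cost

    def cost_per_request(self, feature):
        """Average cost per request for a feature, or 0.0 if it has no traffic."""
        n = self.requests_by_feature.get(feature, 0)
        return self.spend_by_feature.get(feature, 0.0) / n if n else 0.0

    def most_expensive_feature(self):
        """Feature with the highest total spend, or None if nothing is recorded."""
        return max(self.spend_by_feature, key=self.spend_by_feature.get, default=None)


tracker = CostTracker(price_in_per_million=1.00, price_out_per_million=3.00)
tracker.record("chat", input_tokens=1_000, output_tokens=500)
tracker.record("chat", input_tokens=3_000, output_tokens=500)
tracker.record("summarize", input_tokens=20_000, output_tokens=1_000)

print("Average chat cost:", tracker.cost_per_request("chat"))
print("Top spender:", tracker.most_expensive_feature())
```

Even this small amount of attribution answers the review questions above: in the sample data, a single long-document summarization request costs more than both chat requests combined.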

I also host an AI podcast and content series called “Pioneers.” This series takes you on an enthralling journey into the minds of AI visionaries, founders, and CEOs who are at the forefront of innovation through AI in their organizations.

To learn more, please visit Pioneers on Beehiiv.

Wrapping Up

As we've explored, the narrative around AI expenses needs a critical update. While training large language models grabs headlines, the persistent, operational cost of LLM inference is rapidly becoming the dominant factor in the total cost of ownership for most companies. Driven by wider adoption, real-time demands, and increasingly complex models, this inference cost demands strategic focus.

This requires a fundamental mindset shift: moving away from viewing AI implementation as a one-off project and towards managing AI systems as products that require continuous operation and optimization for both performance and cost efficiency. Success isn't just deploying a model; it's running it sustainably at scale.

Here’s what I recommend as you navigate this landscape:

Scrutinize Your Initiatives: Review current and planned AI deployments specifically through the lens of projected inference cost. Don't let it be an afterthought.

Integrate Planning: Foster collaboration between your tech and finance teams to create realistic cost models that cover the entire AI lifecycle, emphasizing operational spending.

Prioritize Optimization: Make reducing inference costs (through model right-sizing, optimization techniques like quantization, and smart hardware and software infrastructure choices) a core competency within your AI practice.

Ultimately, preparing for and actively managing inference costs isn't just about controlling spending. It's a strategic enabler, crucial for scaling your AI efforts effectively and ensuring you achieve lasting, profitable value from these powerful technologies.

I’ll see you next week with more insights on building and deploying enterprise AI.

Until then,

Ankur.
