NLP in Action: Entity Resolution

A walkthrough of traditional forms of entity resolution such as Regex matching, fuzzywuzzy, and TF-IDF. See how they shape up against each other with their respective performances on a single dataset.

This is the first issue of our series, NLP in Action. This series highlights the good and the bad of common methods of natural language processing. In doing so, we hope to spark conversation and curiosity in the world of NLP.

This post is sponsored by Multimodal, a NYC-based development shop that focuses on building custom natural language processing solutions for product teams using large language models (LLMs).

With Multimodal, you will reduce your time-to-market for introducing NLP in your product. Projects take as little as 3 months from start to finish and cost less than 50% of newly formed NLP teams, without any of the hassle. Contact them to learn more.

Computers have made working with numerical data a breeze. Anyone with internet access can perform complex functions or create a graph simply by selecting a table of numbers.

This is not the same for textual data. Analyzing text in the past was only known to a small group of people, but natural language processing (NLP) is democratizing textual analysis.

Entity resolution (ER), or the disambiguation of records referring to the same entity, is an issue many analysts or developers run into. To better understand ER, here is Senzing CEO Jeff Jones:

NLP methods such as Regex matching, fuzzywuzzy, and TF-IDF are popular solutions for entity resolution. Let’s take a look at these and determine their benefits, drawbacks, tradeoffs, and more. Run times, the presence of patterns in data, and coding knowledge are a short list of factors that will help users determine which traditional entity resolution method works best for them.

Regex Matching

What is Regex matching?

Regex matching, or regular expression matching, is a programming method used to find or match a set of strings to another. The Python module, re, supports regular expression operations and is a popular method of string parsing. Regex matching can match special characters or a set of characters. For re’s full documentation, click here.

Regex matching searches for the presence of a unique sequence of characters in a given string. This functionality is useful in working with textual data, which is exemplified below.

Before we delve deeper, let’s familiarize ourselves with the dataset we are working with. This dataset was utilized in the VLDB 2010 paper1, a research publication article. There are a total of 2224 matches in the entire set with 4908 records. The first five fields were concatenated into one string. Here is one instance of the data:

“304586 The WASA2 object-oriented workflow management system Gottfried Vossen, Mathias Weske International Conference on Management of Data 1999”

That’s it - one, long string.

The methods will be evaluated by f1 and accuracy performance scores as well as counts of false negatives, false positives, and incorrect matches.

f1 score - weighted average of precision, the ratio of correctly predicted positive observations to total positive predictions, and recall, the ratio of correctly predicted positive observations to total positive observations

accuracy - the ratio of correctly predicted observations to total observations

false negative - when predicted class is false but actual class is true

false positive - when predicted class is true but actual class is false

incorrect matches - when the predicted match is not an actual match

The full notebook of this exercise is linked at the bottom of the article. Now - let’s get started with some NLP magic.

Regex Matching Example

Functions created:

clean_text(): removes special characters like colons, dashes, and double spaces that are replaced with a single space. This limits the ambiguity that might occur from typical typographical differences between strings (i.e.” object-oriented workflow” and “object oriented workflow”).

create_partial_title(): returns a lowercase partial title from the first n words.

find_duplicate_indices(): returns an array of indices of possible duplicates. Checks lowercase partial title against row then checks for the same publishing date. If these conditions are met, then the possible duplicate is added to the array.

After the data is cleaned, a partial title is created from the string given the first specified amount of words. This method was tested in three different rounds using the first 2, 3, and 4 words. In each of these rounds, the top-rated duplicate and its index is matched to the record.

Here are the results:

2 words in partial title:

Total matches: 3954

Matches remaining: 956

3 words in partial title:

Total matches: 4170

Matches remaining: 956

4 words in partial title:

Total matches: 4266

Matches remaining: 644

The total elapsed time is 1 minute 29 seconds.

As expected, the number of matches increased as the number of words in the partial title increased. Although a longer title length may capture more matches, increasing the number of words in the title incurs a higher chance of false-negative matches. A user must define which is more important to have less of: false negatives or false positives. Finding the right balance between these two is like walking a tightrope.

Regex matching is highly intuitive when working along the lines of traditional matching and gives the user some wiggle room in specifying their matches. This method can be time-consuming despite a short run time, as it requires iterative testing to achieve the required number of matches.

Pattern matching is also only applicable on datasets with identifiable patterns, so this approach would not work with unstructured data. Using this method requires manual work to build initially and then even more work to maintain. Furthermore, even in datasets with these identifiable patterns, the unique markers indicative of a match can vary drastically; thus, pattern matching is not instantly generalizable and requires user refinement from dataset to dataset.

Evaluation of Regex Matching

f1 score: 0.93

accuracy: 0.94

false negatives: 234

false positives: 52

incorrect matches: 12

Below is an image of the first ten records from the dataset and their respective top duplicates using Regex matching.

An f1 score and accuracy of 93% and 94% respectively are positive indicators of Regex matching’s performance on this dataset. Approximately 11%, 234 false negatives out of 2224 total matches, of actual matches were disregarded by the algorithm. Only 52 duplicates, or about 2%, of total matches were labeled incorrectly.

Regex matching performed well on the dataset: high f1 score and accuracy score, low number of false positives, and a higher number of false negatives. Will it perform better or worse than fuzzywuzzy or TF-IDF? Continue reading to find out.

Fuzzywuzzy Library

What is the fuzzywuzzy library?

Fuzzywuzzy is an open-source Python library used for string matching given a specific pattern, similar to the pattern matching and regex method. The library uses Levenshtein Distance to calculate differences between sequences within the string.

Fuzzywuzzy Example

Utilizing fuzzywuzzy’s token ratio score of 0 to 100, the closest match and its index are paired to each record. After 200 iterations and 24 minutes of runtime, a new dataset resulted with the first 200 records duplicate index, duplicate text, and the confidence score of the match.

The application of fuzzywuzzy is fairly easy. There is little or no need for data cleaning as the library is resilient to typographical differences within duplicates. By providing a confidence score, the user can be more sure of matches if used in conjunction with another entity resolution approach.

While fuzzywuzzy is undoubtedly one of the easier methods, it does have some limitations. Long runtimes and limited user control over what constitutes a match make this technique almost impossible to use on large datasets. Given methods may support beginners in entity resolution, but experienced developers may find these methods restrictive when faced with complications.

Evaluation of Fuzzywuzzy

Here is an image of the top ten records from the dataset. Note how each duplicate match is given a confidence score.

Just within the first ten records, confidence scores are above 84%. What often causes lower confidence scores are differences in uppercase or lowercase, extra spaces, and special characters.

Because the first 200 records took close to 25 minutes of runtime, evaluating this entire dataset along with overall performance scores and false positives, false negatives, and incorrect matches would take too much time. Therefore, fuzzywuzzy will not be evaluated in the same way as Regex matching and the next example, TF-IDF.

When working with larger datasets, you may want to consider other scalable entity resolution methods or just make sure you have enough time in the day if you want to use fuzzywuzzy.

TF-IDF Vectorizer

What is TF-IDF Vectorizer?

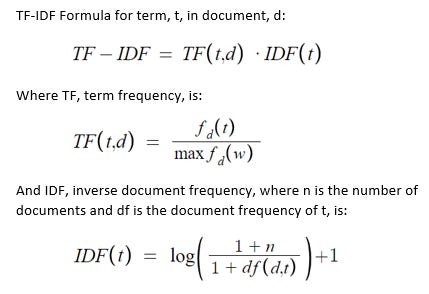

TF-IDF, or Term Frequency-Inverse Document Frequency, is a sophisticated entity resolution method that evaluates how similar records are to one another. This common algorithm converts text into a meaningful representation of numbers. This statistical measure is calculated by multiplying two numbers:

Term frequency - number of times a word appears in a document

Inverse document frequency - number of documents the word appears in

A high score indicates that a word is more relevant in the document. Common words typically have very low TF-IDF scores within a dataset. This article explains TF-IDF more in-depth.

TF-IDF Vectorizer tokenizes documents while learning the vocabulary of the dataset. Compared to its counterpart, CountVectorizer, TF-IDF is the preferred method because it calculates the importance of words in the document compared to just the frequency of words. Common words that are statistically less important can then be removed resulting in less noise in your model.

Let’s put TF-IDF Vectorizer to the test.

TF-IDF Vectorizer Example

Functions used:

awesome_cossim_topn(): returns matrix after performing sparse matrix multiplication followed by top-n multiplication

get_matches_df(): returns dataframe with matches and confidence scores

TF-IDF has an extremely quick run time (under 5 seconds in our example). Like fuzzywuzzy, it is also resilient to typographical differences between duplicates. TF-IDF is a solid method for entity resolution that provides fast machine learning support. Compared to other methods such as pattern matching or fuzzywuzzy, TF-IDF Vectorizer is the most suitable method on larger datasets.

TF-IDF is the most code-intensive of the three methods and requires some understanding of supporting functions such as sparse matrices.

Since TF-IDF is built off of the bag-of-words model, TF-IDF falls short in recognizing semantic similarities between words. Semantic similarity measures how similar words’ meanings are. Instead, TF-IDF uses lexical similarity that only measures words’ similarities with their actual letters.

For entity resolution’s sake, lexical similarity obviously matters a greater deal than semantic similarities. TF-IDF worked well on a smaller set of structured data. But with larger, more complex, and unstructured datasets, a tool that understands semantic similarities would greatly support the use of TF-IDF where lexical similarity falls short.

Evaluation of TF-IDF

f1 score: 0.90

accuracy: 0.90

false negatives: 51

false positives: 191

incorrect matches: 258

Here’s a snapshot of the first ten records and their duplicates in the dataset. Notice the confidence scores given, similar to fuzzywuzzy’s confidence scores.

The TF-IDF method has a slightly lower f1 score and accuracy of both 90% compared to Regex matching’s performance scores. Realistically, an accuracy score of 90% is an extremely strong model, so don’t dismiss TF-IDF because it scored a bit less than Regex matching.

TF-IDF also has a much lower number of false negatives: 51 false negatives compared to Regex matching’s 234. Despite the lower number of false negatives, TF-IDF does have a higher number of false positives than Regex matching.

So, which is the “better mistake” to make in this example: false negative or false positive?

A false negative, in this case, means predicting that another record is not a duplicate when in fact, it is. A false positive means that predicting another record is a duplicate when in fact, it is not.

When performing entity resolution, you would want your model to identify as many duplicates as possible and then evaluate after. Therefore, allowing a higher number of false positives with checking afterward would provide the best results.

If you chose to pick a method with more false negatives, like Regex matching, your model is geared towards missing possible matches. TF-IDF’s higher number of false positives and ability to be scaled quickly on large datasets makes it the top entity resolution method evaluated.

Simple and accurate textual analysis is far from the simplicity and accuracy of working with numerical data. However, machine learning methods are providing tools to help developers and eventually any person analyze textual data using natural language processing. This is evident in entity resolution methods such as Regex matching, fuzzywuzzy, and TF-IDF.

Click here to see our entire Google Colab notebook with detailed documentation, functions, and outputs of each method.

Subscribe to get full access to the newsletter and website. Never miss an update on major trends in AI and startups.

Here is a bit more about my experience in this space and the two books I’ve written on unsupervised learning and natural language processing.

You can also follow me on Twitter.

Köpcke, H.; Thor, A.; Rahm, E. Evaluation of entity resolution approaches on real-world match problems Proc. 36th Intl. Conference on Very Large Databases (VLDB) / Proceedings of the VLDB Endowment 3(1), 2010. 2010-09.