The Future Of DALL-E 2 And What It Means For The Graphic Design Industry

OpenAI's image generator is now available to the public, and companies are rushing to produce illustrations at a bargain price. Is this the end for graphic designers?

Key Takeaways

DALL-E 2, the second version of OpenAI's text-to-image generator, was released to the public in September 2022 and caused uproar from graphic designers.

While DALL-E was mainly based on GPT-3, DALL-E 2 is built on CLIP, a contrastive model that links text and images, and unCLIP, a diffusion-based model that generates images from CLIP embeddings. Together, these models enable DALL-E 2 to produce more accurate, higher-resolution images.

DALL-E 2 is equipped with two new features, inpainting and outpainting, which allow users to edit the inside and outside of an existing image.

Although DALL-E 2 is probably the most popular AI art generator on the market, the industry is rapidly growing. Stable Diffusion and Midjourney are currently DALL-E 2's biggest competitors.

As producing art becomes more accessible than ever, graphic designers may be forced to reduce their prices to stay competitive.

AI image generators are trained on existing images, which enables them to mimic a particular artistic style—or the style of a particular artist. This causes problems for artists as users can now imitate their work without their consent.

While some graphic designers may lose their jobs to AI, most designers are likely to use AI as a tool to enhance their work.

This post is sponsored by Multimodal, a NYC-based development shop that focuses on building custom natural language processing solutions for product teams using large language models (LLMs).


The last time we wrote about DALL-E 2, the new-and-improved version of OpenAI’s image generator had not yet been released to the public. We could only speculate on how it would differ from its predecessor, DALL-E, based on what OpenAI shared with us at the time.

Since then, DALL-E 2 has been released: first in beta to invited users in July, then to all users in September. It took the world by storm.

Companies are already using DALL-E 2 to visualize products, generate unique digital assets like logos and thumbnails, and create innovative marketing campaigns. Cosmopolitan even used DALL-E 2 to create "the world's first artificially intelligent magazine cover."

Unsurprisingly, it didn’t take long for graphic designers and other visual artists to start voicing their concerns. Some questioned their job security, while others openly criticized those who decide to use AI instead of hiring real artists.

But do artists have real reasons for concern? Could DALL-E 2 really replace them or force them to lower their prices? Let’s explore these questions together, first by reviewing what's new with DALL-E 2.

DALL-E 2 Overview: The New, The Old, And The Improved

OpenAI's initial text-to-image AI system, DALL-E, was amazing in theory—but much less so in practice. While users were blown away by its many possibilities, they weren't exactly happy with its execution.

Images had low resolution, lacked details, and often failed to accurately capture user text prompts. Generating images also took quite a lot of time, which made the AI system unsuitable for more impatient users.

These issues are mainly resolved in the second version of the AI model.

The text-to-image generation is now faster and more accurate, largely because DALL-E 2 is no longer based on GPT-3 like its predecessor, but on contrastive and diffusion models called CLIP and unCLIP. We discussed how these models work in more depth in a previous issue.

But, in short, CLIP and unCLIP enable DALL-E 2 to better understand and interpret natural-language commands and generate high-resolution images that depict those commands more accurately.
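To make the contrastive idea a little more concrete, here is a minimal sketch in Python. It is not OpenAI's implementation. It only illustrates the general principle that a CLIP-style model places captions and images in a shared vector space and scores each pair by cosine similarity. The three-dimensional vectors are made-up toy values, and `cosine_similarity` and `best_caption` are hypothetical helper names chosen for this example.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def best_caption(image_embedding, caption_embeddings):
    """Return the caption whose embedding is most similar to the image's."""
    return max(
        caption_embeddings,
        key=lambda caption: cosine_similarity(
            image_embedding, caption_embeddings[caption]
        ),
    )

# Toy embeddings (assumed values; real models use far more dimensions).
captions = {
    "a bear scientist with glasses": [0.9, 0.1, 0.2],
    "rolling hills in an oil painting": [0.1, 0.8, 0.3],
}
image = [0.85, 0.15, 0.25]  # stands in for the output of an image encoder

print(best_caption(image, captions))
```

In a real system, the embeddings would come from trained text and image encoders. A diffusion-based decoder such as unCLIP then works in the opposite direction, producing an image from an embedding rather than scoring one.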

According to OpenAI’s study, users can easily tell the difference between images generated by each model—and they prefer images generated by DALL-E 2:

88.8% of study participants said they preferred DALL-E 2 over DALL-E for its photorealism.

71.7% of participants also preferred DALL-E 2 over DALL-E for its caption matching.

Such positive user feedback was enough to cause a stir in the visual art circles. But the new AI model was now also equipped with two additional features, inpainting and outpainting, that would cause further concern.

Inpainting allows users to edit and retouch existing images—both images uploaded by users and those generated by DALL-E 2—using natural-language commands. Let us show you how it works.

We first used DALL-E 2 to generate a new image. We then erased the part of that image that we wanted to edit:

After that, we gave the AI system another prompt and a corresponding image was automatically added to the original image:

(It’s worth noting that only two variations actually contained a “bear scientist with glasses.” So, the inpainting feature may still require some improvements.)
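For readers who want to picture what "erasing" part of an image means, the following sketch builds the kind of mask an inpainting tool typically works with: a grid in which transparent pixels (alpha 0) mark the region to regenerate and opaque pixels (alpha 255) mark what to keep. This is an illustration under our own assumptions, not DALL-E 2's internal code. The function name `make_inpainting_mask` and the tiny 8 by 6 canvas are hypothetical.

```python
def make_inpainting_mask(width, height, erase_box):
    """Build an alpha mask: 0 marks pixels to regenerate, 255 marks pixels to keep.

    erase_box is (left, top, right, bottom), with right and bottom exclusive.
    """
    left, top, right, bottom = erase_box
    return [
        [
            0 if (left <= x < right and top <= y < bottom) else 255
            for x in range(width)
        ]
        for y in range(height)
    ]

# Erase a 3 x 3 square from a small 8 x 6 canvas (toy dimensions).
mask = make_inpainting_mask(8, 6, erase_box=(2, 1, 5, 4))
erased = sum(row.count(0) for row in mask)
print(f"{erased} of {8 * 6} pixels marked for regeneration")
```

The text prompt then only has to describe what should appear in the erased region, and the untouched pixels give the model the context it needs to blend the new content in.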

We covered inpainting in depth in a previous post; the feature was released in early 2022.

But outpainting was announced in August 2022, just a month before OpenAI would release DALL-E 2 to the public, so we had not tested it when we last wrote about DALL-E 2. Let's test it together now.

A Deeper Dive Into DALL-E 2's Outpainting Feature

As opposed to inpainting, outpainting lets users edit the "outside" of existing images.

It allows them to add new visual elements to the exterior of an image in order to extend it beyond its original borders. That way, users can add more or new context to existing images while preserving the original artistic style.

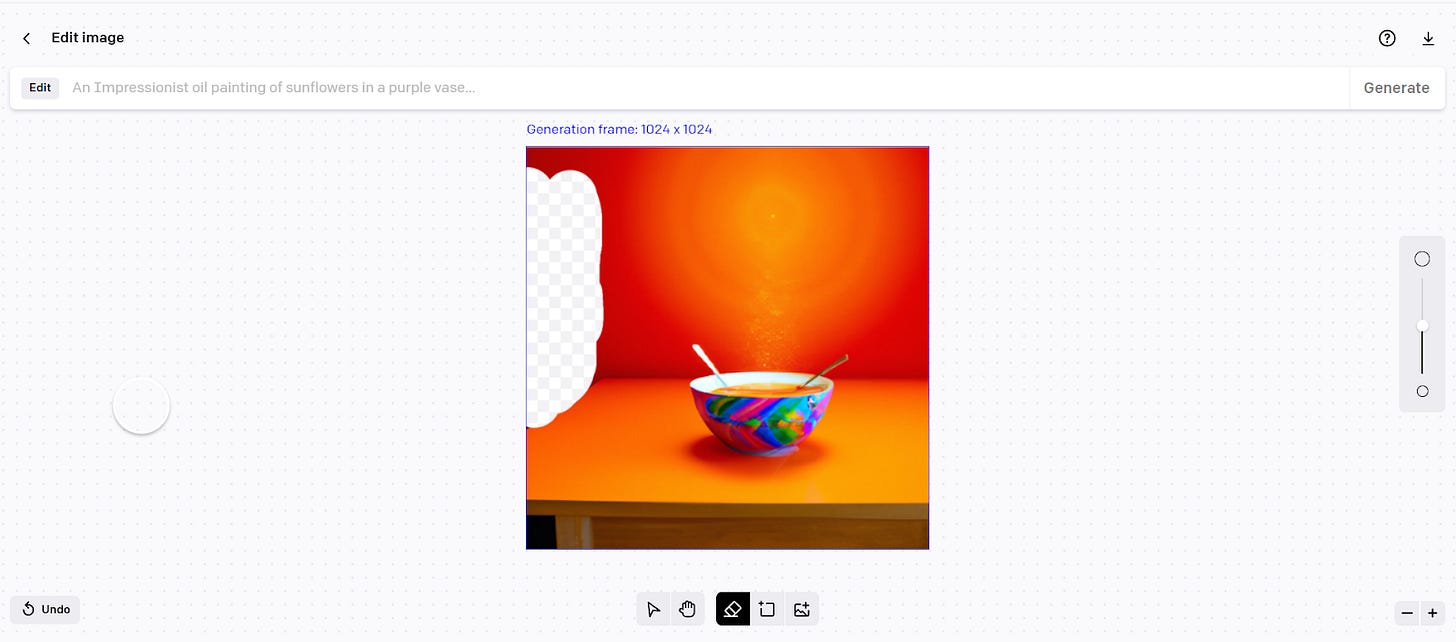

To use this feature, users first need to select or upload an image they want to edit. After that, they need to add a 1,024-pixel by 1,024-pixel generation frame in which they'll extend the image they selected:

The generation frame is where new visual elements will appear. If the original image is too big (larger than 1,024 by 1,024 pixels), users will first have to resize it before they can extend it.
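The resizing step and the role of the frame can be sketched with a little arithmetic. The snippet below is a rough illustration, not how DALL-E 2's editor is implemented. It assumes the image's top-left corner sits at (0, 0) on the canvas, and the helper names `fit_to_frame` and `new_pixels` are hypothetical.

```python
FRAME_SIZE = 1024  # generation frame edge in pixels, as described above

def fit_to_frame(width, height, frame=FRAME_SIZE):
    """Shrink (never enlarge) an image so both sides fit inside the frame."""
    scale = min(1.0, frame / width, frame / height)
    return round(width * scale), round(height * scale)

def new_pixels(frame_left, frame_top, image_w, image_h, frame=FRAME_SIZE):
    """Count frame pixels not covered by an image whose top-left is at (0, 0)."""
    overlap_w = max(0, min(frame_left + frame, image_w) - max(frame_left, 0))
    overlap_h = max(0, min(frame_top + frame, image_h) - max(frame_top, 0))
    return frame * frame - overlap_w * overlap_h

# A 2048 x 1536 image is too big, so it is scaled down by half.
resized = fit_to_frame(2048, 1536)
print(resized)  # (1024, 768)

# Slide the frame 512 pixels to the right of the image's left edge.
to_generate = new_pixels(512, 0, *resized)
print(f"{to_generate} pixels in the frame are empty and would be generated")
```

The part of the frame that still overlaps the original image is what lets the new content pick up the existing style and colors.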

Once the image is inside the frame, users can fill the frame with new, AI-generated images. To do so, they simply need to give the AI system a new text prompt. For example, this is the result when we entered the prompt "an old oil painting with rolling hills":

As you can see, the background of this image is now extended thanks to new visual elements added beyond its "edges." Notice also that the new visuals match the original style and colors, which makes them look as if they were always part of the original image.

One thing to note is that DALL-E 2 can sometimes be too literal in its interpretations of text prompts:

Although there is still room for improvement, outpainting already works really well. It’s no surprise that visual artists, especially graphic designers, are concerned about how it will affect them.

On that note, we should also keep in mind that DALL-E 2 is not the only AI image generator on the market. There are many others, and some are evolving just as fast.

Before we dive into how these AI models will affect graphic designers, let’s quickly introduce two of DALL-E 2’s biggest competitors: Midjourney and Stable Diffusion.

How Does DALL-E 2 Compare To Its Competitors?

DALL-E 2 is arguably the most popular AI art generator on the market, but that doesn’t mean there aren’t many others that may work just as well as DALL-E 2 (or eventually even better).

Perhaps DALL-E 2 is so popular because its predecessor appeared long before its current biggest competitors. While DALL-E was first announced in January 2021, Midjourney and Stable Diffusion were released in beta only earlier this year, when the excitement about AI image generators had already dissipated.

Still, both Midjourney and Stable Diffusion are getting rave reviews from users—possibly because they learned a few lessons from DALL-E’s initial release.

How do these AI systems compare to DALL-E 2? Do they lack some of its features, or perhaps offer even more options to users? Let’s find out.

DALL-E 2 vs. Midjourney

Let’s start with a quick walk-through of how Midjourney works.

This AI system requires users to join a dedicated Discord server in order to generate images. Once they join, users can enter their prompts by typing in "/imagine" in one of the #newbies channels.

This command will create a black box where users can write their prompts:

Like DALL-E 2, Midjourney will create four different images based on one prompt:

From there, users can upscale any of the four images by clicking U1 - U4 or create variations of each of the four images by selecting V1 - V4. And if they don't like any of the images, they can easily create new ones based on the same prompt by clicking the refresh button.

How Does Midjourney Differ From DALL-E 2?

First, all images generated through Midjourney are public. Everyone in the chat room can see the images that other users are generating in real time. This is an obvious drawback for those who want to use images for commercial purposes, but also for anyone who just wants more privacy.

But the fact that all images are displayed as they are being generated can cause other difficulties, too.

For example, if users aren’t paying attention to their chat rooms non-stop, they may not immediately notice that their images are ready. To find them later, they’ll potentially have to sift through dozens of images that other users have generated in the meantime.

Another thing to mention is that Midjourney lacks DALL-E 2's more advanced features. Users are only able to generate images, not edit or expand them.

But Midjourney seems to be a better choice than DALL-E 2 for creating more artsy and detailed images. We think Midjourney is more creative than DALL-E 2.

Some even compare Midjourney's style to that of fantasy and science fiction genres. Midjourney may be a better fit for creative industries like gaming, film, music, and publishing.

DALL-E 2 vs. Stable Diffusion

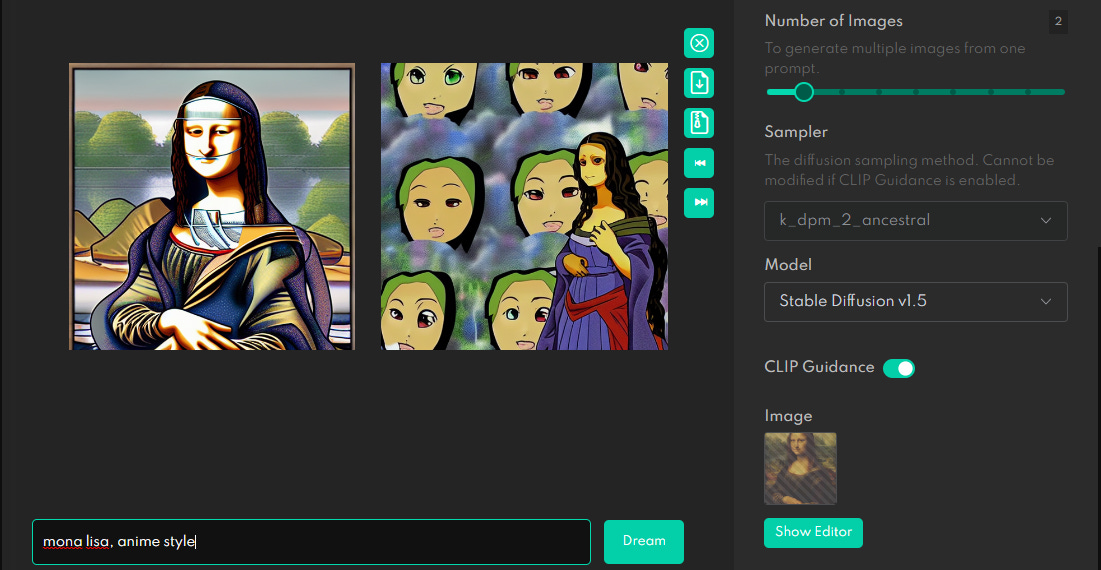

Stable Diffusion is an AI image generator that can be run both locally and online. We’ll use its online version, DreamStudio, to test how it works.

Like DALL-E 2 and Midjourney, DreamStudio generates images based on text prompts. But it also lets users select the width, height, and number of images they want to generate from one prompt:

DreamStudio also allows users to “inpaint” and “outpaint” images, so we tested how its outpainting feature compares to that of DALL-E 2.

We uploaded the same image we used to test DALL-E 2, Da Vinci's Mona Lisa, to DreamStudio’s editor. We also gave DreamStudio the same prompt ("an old painting with rolling hills").

Here's what it came up with based on our entries:

There's an obvious distinction between the original and generated images. The transition is nowhere near as seamless as in the image we edited with DALL-E 2. Here's that image from DALL-E 2 again:

It should be noted that different AI systems may require different prompts to deliver the best results. The example above doesn't necessarily prove that DALL-E 2 works better than DreamStudio. It may only suggest that DALL-E 2 was more successful at interpreting this specific prompt.

Many users say that DreamStudio doesn't work well with abstract or more general prompts. That suggests that DALL-E 2 is better at interpreting natural language, especially when it comes to vague text prompts.

But Stable Diffusion/DreamStudio does have some advantages over DALL-E 2. As we have mentioned, Stable Diffusion can be run locally. And when run locally, it generates images free of charge. That's a big upside considering that DALL-E 2 currently runs on paid credits.

Also, Stable Diffusion/DreamStudio can generate images based on both textual and visual prompts—i.e., uploaded images. For example, DreamStudio generated these two images based on our text prompt and the image of Da Vinci’s Mona Lisa:

Overall, Stable Diffusion gives users more options than DALL-E 2, but in our tests its results were lower in quality.

How Will DALL-E 2 Impact The Graphic Design Industry?

As we saw, DALL-E 2 isn’t that different from (or better than) some other AI image generators. Still, artists are mainly concerned about DALL-E 2 and not so much about other AI models. One reason is that OpenAI publicly stated that users can sell their creations for profit, without limitations.

This is an obvious problem for graphic designers. Non-artists could now become their competitors, while businesses could start creating images for all purposes using AI instead of hiring real artists.

On top of that, AI may force graphic designers to lower their prices to stay competitive. As Aditya Ramesh, an OpenAI researcher who helped build DALL-E 2, put it, AI systems could "bring the cost [of producing art] to something very small."

Another part of the problem pertains to all AI models and not just DALL-E 2.

All AI image generators—or, rather, their neural networks—are currently trained on existing images. That’s how they are able to mimic the style of specific artists:

The problem is that users can now create images that look as if they were created by specific artists without getting the artists’ consent—and without paying them.

"We work for years on our portfolio. Now suddenly someone can produce tons of images with these generators and sign them with our name." — Greg Rutkowski (Source)

While users are already using AI to create images in the styles of their favorite artists, we have yet to see if AI will lead to graphic designers losing their jobs or having to lower their prices. Alternatively, graphic designers may start using DALL-E 2 "as a tool to support their creative process" and find new ways to capitalize on AI-generated images.

Should Graphic Designers Worry About AI Image Generators? Perhaps Not Yet

While some companies, like Cosmopolitan, Heinz, and OctoSQL, were quick to take DALL-E 2 for a test drive, it does not seem likely that many will follow suit.

The technology is still pretty new, so many companies may be wary of using it, especially because doing so could open them up to lawsuits. As several legal experts pointed out, the fact that OpenAI retains ownership over all images may give it grounds for future legal action against users.

Also, DALL-E 2 and similar AI systems are pretty bad at spelling, which could limit their potential applications in business:

Lastly, AI systems like DALL-E 2 can't yet generate branded images, meaning images with brand-specific colors, shapes, and styles. Many companies may still choose to work with human designers who can better understand their requirements.

There are other issues that need resolving before AI-generated images can be used on a larger scale. Companies behind AI systems still need to work on restricting unethical use, making their models less biased, and improving the systems' ability to create realistic images.

In the meantime, graphic designers may start using AI systems to enhance their work on their own projects. And perhaps that's exactly where the (near) future is headed.


Here is also more about my experience in this space and the two books I’ve written on unsupervised learning and natural language processing.
