Reasoning Vs Non-Reasoning LLMs: Architectural Tradeoffs

Explore the architectural divide in modern AI: specialized reasoning engines vs. general-purpose models, and the emerging standards shaping their deployment.

Key Takeaways

Mixture-of-Experts (MoE) models like DeepSeek R1 offer significant efficiency gains over dense models by activating only a fraction of their parameters per token.

Grok-3's "Big Brain" mode demonstrates the power of massive computational resources for complex reasoning.

Hybrid models like Claude 3.7 Sonnet provide flexible reasoning capabilities with controlled compute allocation.

Emerging deployment standards are defining clear use cases for reasoning vs. pattern-matching models.

The future of AI deployment lies in strategic combinations of specialized and general-purpose architectures.

In 2023, I started Multimodal, a Generative AI company that helps organizations automate complex, knowledge-based workflows using AI Agents. Check it out here.

Today, a clear divide is emerging between specialized reasoning engines and general-purpose hybrid models. Let’s dive into the architectural tradeoffs that define modern AI systems, exploring how different approaches tackle the challenges of scale, efficiency, and versatility.

Architectural Paradigms in Modern LLMs

Specialized Reasoning Engines

DeepSeek R1 (MoE Architecture)

DeepSeek R1 represents a paradigm shift in AI model design, using a Mixture of Experts (MoE) architecture to achieve high efficiency at very large scale. A learned router sends each token to a small subset of expert sub-networks, so R1 activates only 37 billion of its 671 billion parameters per token. This sparse computation sharply reduces per-token compute, letting R1 keep a massive knowledge base while running with roughly the per-token compute of a much smaller dense model.
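To make sparse expert activation concrete, here is a minimal sketch of generic top-k gating, assuming scalar inputs and toy experts. It is not DeepSeek's router, which differs in details such as the number of experts and the use of shared experts; `top_k_route` and `moe_layer` are hypothetical names. The point is that only the selected experts are ever evaluated.

```python
import math
from typing import Callable, List, Tuple

Expert = Callable[[float], float]

def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(router_logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and renormalize their gate weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))

def moe_layer(x: float, experts: List[Expert], router_logits: List[float], k: int = 2) -> float:
    """Weighted sum over the selected experts only; unselected experts are never called."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))

if __name__ == "__main__":
    calls: List[int] = []

    def make_expert(idx: int) -> Expert:
        def expert(x: float) -> float:
            calls.append(idx)  # record which experts actually ran
            return idx * x
        return expert

    experts = [make_expert(i) for i in range(8)]
    logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
    y = moe_layer(1.0, experts, logits, k=2)
    print(f"output={y:.4f}, experts run={sorted(calls)} of {len(experts)}")
```

In the demo, only two of the eight toy experts run for the token; the other six cost nothing, which is the source of the MoE savings.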

R1 was optimized with large-scale reinforcement learning on chain-of-thought reasoning, which encouraged self-correction behaviors: the model learns to check and revise its intermediate steps before committing to an answer. With a 64K context window and a dedicated 32K token budget for chain-of-thought reasoning, R1 can handle extended dialogues and multi-step problem-solving.

Perhaps most notably, DeepSeek R1's MIT-licensed MoE implementation achieves an 82% reduction in floating-point operations (FLOPs) compared to dense models of similar scale. This efficiency gain translates into lower inference costs and faster response times, making R1 a compelling option for resource-conscious deployments. The savings are in compute, not memory: all 671 billion parameters must still be held in memory, because any expert may be selected for the next token.
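A quick back-of-the-envelope check helps put the 82% figure in context. The sketch below assumes the common rule of thumb of about 2 FLOPs per active parameter per token and ignores attention and routing costs; the helper names are hypothetical.

```python
def flops_per_token(active_params: float) -> float:
    # Rule of thumb: ~2 FLOPs (one multiply, one add) per active parameter per token,
    # ignoring attention over the context window and router overhead.
    return 2.0 * active_params

def weight_memory_gb(total_params: float, bytes_per_param: float) -> float:
    # Every expert must be resident in memory, so storage scales with *total* parameters.
    return total_params * bytes_per_param / 1e9

TOTAL_PARAMS = 671e9   # all experts
ACTIVE_PARAMS = 37e9   # parameters used for any single token

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
naive_reduction = 1 - flops_per_token(ACTIVE_PARAMS) / flops_per_token(TOTAL_PARAMS)

print(f"active fraction: {active_fraction:.1%}")
print(f"naive FLOP reduction vs. a dense 671B model: {naive_reduction:.1%}")
print(f"weights at 1 byte/param (FP8): {weight_memory_gb(TOTAL_PARAMS, 1):.0f} GB")
```

This naive count gives about a 94.5% reduction, more than the quoted 82%. One plausible reason for the gap is that attention and other shared components do not shrink with expert sparsity, and the result also depends on which dense baseline is chosen. The memory line shows the other side of the tradeoff: roughly 671 GB of weights at one byte per parameter.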

Grok-3 ("Big Brain" Mode)

Elon Musk's xAI has taken a different approach with Grok-3, focusing on raw computational power and advanced reasoning capabilities. Grok-3 draws on xAI's cluster of roughly 100,000 Nvidia H100 GPUs, and its "Big Brain" mode applies additional compute to a single query, letting it tackle computationally intensive tasks with greater depth.

At the heart of Grok-3's architecture is a multi-agent debate system that validates solutions through simulated discourse between AI agents. This approach mimics human problem-solving dynamics, allowing Grok-3 to explore multiple perspectives and arrive at more robust conclusions.
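To illustrate the general idea of debate-style validation, here is a toy sketch that uses deterministic stand-in agents instead of real models. It follows a common pattern: independent first drafts, rounds in which each agent sees its peers' answers and may revise, and a final majority vote. It is not a description of xAI's implementation, and `debate` and `make_agent` are hypothetical names.

```python
from collections import Counter
from typing import Callable, List

# An agent maps (question, peers' answers from the previous round) to an answer.
Agent = Callable[[str, List[str]], str]

def debate(question: str, agents: List[Agent], rounds: int = 2) -> str:
    answers = [agent(question, []) for agent in agents]  # independent first drafts
    for _ in range(rounds):
        # Every agent sees the other agents' previous answers and may revise its own.
        answers = [
            agent(question, answers[:i] + answers[i + 1:])
            for i, agent in enumerate(agents)
        ]
    # Final verdict: majority vote over the last round's answers.
    return Counter(answers).most_common(1)[0][0]

def make_agent(initial: str, persuadable: bool) -> Agent:
    """Toy agent: keeps its initial answer unless persuadable, then adopts the peer majority."""
    def agent(question: str, peers: List[str]) -> str:
        if persuadable and peers:
            return Counter(peers).most_common(1)[0][0]
        return initial
    return agent

agents = [make_agent("42", False), make_agent("42", False), make_agent("41", True)]
print(debate("What is 6 * 7?", agents))
```

Real debate systems replace the toy agents with model calls and usually have agents critique each other's reasoning rather than simply copying the majority.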

Grok-3's dynamic compute scaling adjusts processing resources on the fly, with responses typically arriving within 1 to 10 seconds, even for complex queries. This flexibility lets users receive timely responses without sacrificing depth of analysis.

The integration of DeepSearch technology gives Grok-3 the ability to verify information in real time by searching the internet and X (formerly Twitter) for the latest data. This supplements the model's training data with current information and improves its ability to provide accurate, up-to-date answers.

General-Purpose Hybrid Models

Claude 3.7 Sonnet

Anthropic's Claude 3.7 Sonnet takes a hybrid approach, blending the versatility of general-purpose models with advanced reasoning capabilities. Built upon the foundation of Claude 3.5, Sonnet incorporates reinforcement learning from human feedback (RLHF) to refine its outputs and align more closely with human expectations.

A standout feature of Claude 3.7 Sonnet is token-controlled reasoning: extended thinking can be switched off entirely, or users can allocate a thinking budget of up to 128,000 tokens before the model generates its response (the API requires at least 1,024 tokens when thinking is enabled). This granular control over the model's reasoning budget lets users trade quick replies for deep, thorough analysis as needed.
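As a sketch of how a thinking budget is requested in practice, the helper below builds a request body for Anthropic's Messages API without making any network call. The `thinking` object with `type` and `budget_tokens` follows Anthropic's documented extended-thinking parameters, but `build_request` itself is hypothetical, and the limits it enforces (the 1,024-token minimum and the budget staying below `max_tokens`), as well as the extra beta header that very large outputs required at launch, should be checked against Anthropic's current documentation.

```python
from typing import Any, Dict

MODEL = "claude-3-7-sonnet-20250219"
MIN_THINKING_BUDGET = 1024  # documented minimum when extended thinking is enabled

def build_request(prompt: str, max_tokens: int, thinking_budget: int = 0) -> Dict[str, Any]:
    """Return a Messages API request body; thinking_budget=0 leaves extended thinking off."""
    body: Dict[str, Any] = {
        "model": MODEL,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking_budget:
        if thinking_budget < MIN_THINKING_BUDGET:
            raise ValueError(f"thinking budget must be at least {MIN_THINKING_BUDGET} tokens")
        if thinking_budget >= max_tokens:
            # Thinking tokens count toward max_tokens, so leave room for the visible answer.
            raise ValueError("thinking budget must be smaller than max_tokens")
        body["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return body

quick = build_request("Summarize this paragraph.", max_tokens=1024)
deep = build_request("Prove the claim step by step.", max_tokens=20000, thinking_budget=16000)
```

Either body can be passed as keyword arguments to the official SDK, for example `client.messages.create(**deep)`, or posted as JSON to the Messages endpoint.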

Sonnet boasts a 200K token context window with modified self-attention mechanisms, allowing it to maintain coherence over extremely long conversations or documents. Despite its expanded capabilities, Claude 3.7 achieves 37% higher throughput than GPT-4.5, highlighting Anthropic's focus on performance optimization.

GPT-4.5

OpenAI's GPT-4.5 represents the pinnacle of dense model architectures, with a reported parameter count of 12.8 trillion (OpenAI has not officially disclosed the model's size). This sheer scale allows GPT-4.5 to capture intricate patterns and relationships across a vast range of topics and tasks.

A key focus in GPT-4.5's development has been enhancing its emotional intelligence, enabling more nuanced and empathetic interactions. This advancement positions GPT-4.5 as a powerful tool for applications requiring deep understanding of human sentiment and social dynamics.

With a 128K token context window, GPT-4.5 can handle extended conversations and analyze lengthy documents. However, this capability comes at a premium, with output costs reaching $150 per million tokens. This pricing structure reflects the significant computational resources required to operate such a large, dense model at scale.
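To see what per-token pricing means for a single request, here is a small cost calculator. The $150 output price comes from the text above; the GPT-4.5 input price ($75 per million) and the Claude 3.7 Sonnet prices ($3 input, $15 output per million) are launch list prices included as assumptions to check against the providers' current pricing pages.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Pricing:
    input_per_million: float   # USD per 1M input tokens
    output_per_million: float  # USD per 1M output tokens

def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for one request."""
    return (input_tokens * p.input_per_million + output_tokens * p.output_per_million) / 1_000_000

GPT_45 = Pricing(input_per_million=75.0, output_per_million=150.0)
CLAUDE_37_SONNET = Pricing(input_per_million=3.0, output_per_million=15.0)

# A long-document query: 10,000 tokens in, 2,000 tokens out.
for name, p in [("GPT-4.5", GPT_45), ("Claude 3.7 Sonnet", CLAUDE_37_SONNET)]:
    print(f"{name}: ${request_cost(p, 10_000, 2_000):.2f}")
```

With these assumed prices, the same request costs about $1.05 on GPT-4.5 versus about $0.06 on Claude 3.7 Sonnet. For Claude, any extended-thinking tokens would be added to the output count.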

Emerging Deployment Standards

As AI technologies mature, clear patterns are emerging in how different types of models are deployed for specific use cases. This section explores the emerging standards for deploying reasoning models, pattern-matching models, and hybrid approaches.

Reasoning Model Applications

Reasoning models excel in domains requiring complex, multi-step analysis and decision-making. Two key areas where reasoning models are becoming the standard are medical diagnosis and supply chain optimization.

Medical Diagnosis

In the medical field, reasoning models are helping with diagnostic processes:

Multi-step differential diagnosis flows: These models can navigate through complex decision trees, considering a wide range of symptoms, test results, and patient history to narrow down the most likely diagnoses.

Probabilistic reasoning over symptom clusters: By analyzing the likelihood of various conditions based on combinations of symptoms, these models can provide more nuanced and accurate diagnoses, especially for rare or complex cases.
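As a toy illustration of probabilistic reasoning over symptom clusters, the sketch below applies Bayes' rule with a naive conditional-independence assumption. The conditions, priors, and likelihoods are invented for illustration and are not clinical data, and the code is a stand-in for the idea rather than a description of how any particular reasoning model works internally.

```python
from typing import Dict, List

def posterior(priors: Dict[str, float],
              likelihoods: Dict[str, Dict[str, float]],
              observed: List[str],
              floor: float = 1e-6) -> Dict[str, float]:
    """P(condition | observed symptoms), assuming symptoms are independent given the condition."""
    scores: Dict[str, float] = {}
    for condition, prior in priors.items():
        score = prior
        for symptom in observed:
            # Unlisted symptom/condition pairs get a small floor instead of zero.
            score *= likelihoods[condition].get(symptom, floor)
        scores[condition] = score
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}

# Hypothetical numbers, for illustration only.
PRIORS = {"common cold": 0.80, "influenza": 0.18, "measles": 0.02}
LIKELIHOODS = {
    "common cold": {"fever": 0.20, "cough": 0.70, "rash": 0.01},
    "influenza":   {"fever": 0.90, "cough": 0.80, "rash": 0.05},
    "measles":     {"fever": 0.95, "cough": 0.60, "rash": 0.90},
}

post = posterior(PRIORS, LIKELIHOODS, ["fever", "rash"])
for condition, p in sorted(post.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{condition}: {p:.2f}")
```

With these made-up numbers, the rare condition overtakes the common one once fever and rash appear together, which is the kind of shift that matters for rare or complex cases.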

Supply Chain Optimization

Reasoning models are also transforming supply chain management:

Constraint-based reasoning for routing: These models can optimize complex logistics networks by considering multiple constraints such as delivery times, vehicle capacities, and fuel efficiency.

Dynamic replanning with Monte Carlo Tree Search: This approach allows for real-time adjustments to supply chain strategies, adapting to unexpected events or changes in demand.
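Constraint-based routing can be sketched as an exhaustive search over stop orders for a single vehicle, keeping the shortest tour that respects capacity and per-stop deadlines. The function names, data layout, and brute-force search are assumptions for illustration: the search is exponential in the number of stops, and it does not implement Monte Carlo Tree Search replanning. Real systems typically hand such problems to dedicated solvers such as Google OR-Tools.

```python
from itertools import permutations
from typing import Dict, List, Optional, Tuple

Leg = Tuple[str, str]

def best_route(depot: str,
               stops: List[str],
               travel: Dict[Leg, float],
               demand: Dict[str, int],
               capacity: int,
               deadline: Dict[str, float]) -> Optional[Tuple[List[str], float]]:
    """Shortest depot-to-depot tour that meets capacity and arrival deadlines, or None."""
    if sum(demand[s] for s in stops) > capacity:
        return None  # a single vehicle cannot carry the whole load
    best: Optional[Tuple[List[str], float]] = None
    for order in permutations(stops):
        t, here, feasible = 0.0, depot, True
        for stop in order:
            t += travel[(here, stop)]
            if t > deadline.get(stop, float("inf")):
                feasible = False  # arrived too late at this stop
                break
            here = stop
        if not feasible:
            continue
        t += travel[(here, depot)]  # drive back to the depot
        if best is None or t < best[1]:
            best = (list(order), t)
    return best

def symmetric(legs: Dict[Leg, float]) -> Dict[Leg, float]:
    """Add the reverse direction for every leg."""
    out = dict(legs)
    out.update({(b, a): d for (a, b), d in legs.items()})
    return out

TRAVEL = symmetric({("D", "A"): 1, ("D", "B"): 3, ("D", "C"): 3,
                    ("A", "B"): 2, ("A", "C"): 4, ("B", "C"): 1})
DEMAND = {"A": 2, "B": 3, "C": 1}

print(best_route("D", ["A", "B", "C"], TRAVEL, DEMAND, capacity=10, deadline={}))
# (['A', 'B', 'C'], 7.0)
print(best_route("D", ["A", "B", "C"], TRAVEL, DEMAND, capacity=10, deadline={"C": 3.5}))
# (['C', 'B', 'A'], 7.0)
```

A tighter deadline at stop C forces the tour to visit C first, and a load above capacity makes the problem infeasible.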

Pattern-Matching Dominance Areas

While reasoning models excel in complex decision-making, pattern-matching models remain superior in areas requiring rapid processing of large datasets and identification of subtle patterns.

Content Recommendations

In the realm of content recommendations, pattern-matching models continue to dominate:

Attention-based sequence modeling: These models excel at understanding user preferences by analyzing sequences of interactions, leading to more personalized recommendations.

Real-time collaborative filtering: By quickly identifying patterns across large user bases, these models can provide up-to-the-minute recommendations based on current trends and user behaviors.
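For the collaborative-filtering idea, a minimal item-based version compares items by the cosine similarity of their rating vectors and scores a user's unseen items by their similarity to what that user already rated. This is a batch sketch with made-up ratings, not an attention-based or real-time system, and the function names are hypothetical.

```python
import math
from typing import Dict, List

Ratings = Dict[str, Dict[str, float]]  # user -> {item: rating}

def item_vectors(ratings: Ratings) -> Dict[str, Dict[str, float]]:
    """Pivot user rows into item columns: item -> {user: rating}."""
    items: Dict[str, Dict[str, float]] = {}
    for user, row in ratings.items():
        for item, r in row.items():
            items.setdefault(item, {})[user] = r
    return items

def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(a[u] * b[u] for u in a.keys() & b.keys())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def recommend(ratings: Ratings, user: str, k: int = 2) -> List[str]:
    items = item_vectors(ratings)
    seen = ratings[user]
    scores: Dict[str, float] = {}
    for cand, vec in items.items():
        if cand in seen:
            continue
        # Similarity to each item the user rated, weighted by that rating.
        scores[cand] = sum(cosine(vec, items[i]) * r for i, r in seen.items())
    return sorted(scores, key=scores.get, reverse=True)[:k]

RATINGS: Ratings = {
    "ana": {"matrix": 5, "inception": 4},
    "ben": {"matrix": 4, "inception": 5, "interstellar": 5},
    "cai": {"notebook": 5, "titanic": 4},
    "dee": {"matrix": 5, "interstellar": 4, "titanic": 1},
}
print(recommend(RATINGS, "ana"))
```

A production recommender would update similarities incrementally as new interactions arrive instead of recomputing them from scratch.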

Classification Tasks

For classification tasks, pattern-matching models offer unparalleled efficiency:

Fine-tuned LoRA adapters: These lightweight, low-rank adaptations allow rapid, low-cost customization of large language models for specific classification tasks, improving task accuracy while training only a small fraction of the model's parameters.

Ensemble pattern detectors: By combining multiple specialized classifiers, these models can achieve high accuracy across a wide range of classification tasks.
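The LoRA idea can be shown with a little arithmetic. Instead of updating a full weight matrix W, training learns two small matrices B and A of rank r and uses W + (alpha / r) * B @ A, as in the original LoRA formulation. The pure-Python sketch below uses hypothetical helper names and toy matrices to count trainable parameters and merge an adapter into the base weight.

```python
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (m x n) and an (n x p) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    # B is (d_out x rank) and A is (rank x d_in); the frozen W (d_out x d_in) is not trained.
    return rank * (d_out + d_in)

def lora_merge(w: Matrix, b: Matrix, a: Matrix, alpha: float, rank: int) -> Matrix:
    """Return W + (alpha / rank) * B @ A, the adapted weight used at inference."""
    delta = matmul(b, a)
    scale = alpha / rank
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]

full = 4096 * 4096
lora = lora_trainable_params(4096, 4096, rank=8)
print(f"full: {full:,} params, LoRA r=8: {lora:,} params ({lora / full:.2%})")
```

For one 4096 x 4096 projection, a rank-8 adapter trains under 0.4% of the weights. Because the adapter can be merged into W after training, a classifier built this way need not run slower than the base model, and several adapters can share one frozen base.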

Hybrid Deployment Strategies

As the lines between reasoning and pattern-matching blur, hybrid deployment strategies are emerging to leverage the strengths of both approaches.

Claude 3.7's Adaptive Compute Budgeting

Anthropic's Claude 3.7 introduces a novel approach to balancing quick responses with deep reasoning:

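API-controlled thinking tokens: Users set a thinking budget, measured in tokens, through the API, allowing fine-grained control over the depth of reasoning applied to a given task. Thinking tokens are billed as output tokens at $15 per million, compared with $3 per million for input tokens.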

Early exit mechanisms: The model need not spend its entire thinking budget. For simpler queries, it can respond quickly without unnecessary deep reasoning, saving both time and cost.

Grok-3's Mode Switching

xAI's Grok-3 takes a different approach to hybrid deployment:

Automatic Big Brain activation: The model can automatically switch to a more intensive reasoning mode for complex tasks, including:

Multi-variable calculus problems

Legal document analysis

Counterfactual reasoning scenarios

This adaptive approach ensures that the appropriate level of computational resources is applied based on the complexity of the task at hand.
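Here is a minimal sketch of automatic mode switching, assuming a simple keyword heuristic keyed to the task types listed above. This is not xAI's actual activation logic: the patterns, the `choose_mode` name, and the two mode labels are hypothetical, and a production router would more likely use a small classifier model.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Hypothetical triggers for the task types listed above.
HEAVY_PATTERNS = {
    "calculus": re.compile(r"\b(integral|derivative|gradient|partial|multivariable)\b", re.I),
    "legal": re.compile(r"\b(contract|clause|statute|liability|indemnif\w*)\b", re.I),
    "counterfactual": re.compile(r"\b(what if|would have|had not)\b", re.I),
}

@dataclass
class Route:
    mode: str                                   # "standard" or "big_brain"
    reasons: List[str] = field(default_factory=list)

def choose_mode(query: str, long_input_chars: int = 20_000) -> Route:
    """Escalate to the expensive mode when a heavy pattern matches or the input is very long."""
    reasons = [name for name, pattern in HEAVY_PATTERNS.items() if pattern.search(query)]
    if len(query) > long_input_chars:
        reasons.append("long_input")
    return Route("big_brain" if reasons else "standard", reasons)

print(choose_mode("What is the capital of France?"))
# Route(mode='standard', reasons=[])
print(choose_mode("Compute the partial derivative of f(x, y) = x**2 * y."))
# Route(mode='big_brain', reasons=['calculus'])
```

The design is cheap-first: the inexpensive standard mode handles everything unless a rule fires, so extra compute is spent only when the task looks complex.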

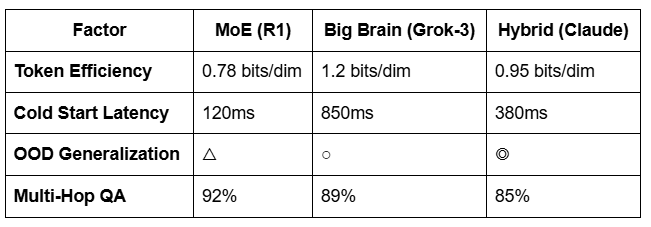

Architectural Tradeoff Matrix

I also host an AI podcast and content series called “Pioneers.” This series takes you on an enthralling journey into the minds of AI visionaries, founders, and CEOs who are at the forefront of innovation through AI in their organizations.

To learn more, please visit Pioneers on Beehiiv.

Wrapping Up

Each approach offers unique strengths: MoE architectures provide efficiency, while dense models offer versatility. Hybrid solutions are emerging to bridge this gap, offering adaptive compute and specialized modes.

As deployment standards crystallize, we see reasoning models excelling in complex decision-making tasks, while pattern-matching models dominate in rapid data processing. The future of AI lies in strategically leveraging these diverse architectures to optimize performance, cost-efficiency, and applicability across a wide range of industries and use cases.

I’ll come back next week with more on model choice and tradeoffs.

Until then,

Ankur.