LLaMA Derivatives: The Latest On Meta’s Open Source LLM

Read on for a comparative overview and applications of various LLaMA derivatives, including a detailed Koala vs. Vicuna LLM comparison.

Key Takeaways

LLaMA’s release has inspired many developers and researchers to fine-tune the model, either by using better datasets or by slightly changing the architecture, to achieve specific, high-quality results.

Some of LLaMA’s top derivatives include Koala LLM, Vicuna LLM, Stanford Alpaca, GPT4ALL, and Guanaco. Dalai and RedPajama are two other interesting iterations.

Koala is a derivative of Meta’s LLaMA developed by the Berkeley Artificial Intelligence Research Lab at UC Berkeley.

The carefully sourced data that Koala was trained on includes high-quality answers to human-like questions, making it better at question-answering and feedback tasks. The resulting model, Koala 13B, is competitive with both open-source and closed LLMs on question-answering tasks.

Vicuna is another LLaMA derivative. It aims to address a key shortcoming of closed LLMs like ChatGPT, whose training and architecture details are not public.

Vicuna differs from other LLaMA derivatives like Alpaca mainly in how it is trained: its developers extended the context length, added memory optimizations, and reduced training costs.

Koala is slightly better at creative tasks than Vicuna, but Vicuna outperforms it in code generation and objective knowledge.

This post is sponsored by Multimodal, an NYC-based startup on a mission to make organizations more productive, effective, and competitive using generative AI.

Multimodal builds custom large language models for enterprises, enabling them to process documents instantly, automate manual workflows, and develop breakthrough products and services.


In our last blog post in this series, we introduced you to LLaMA, an open-source large language model released by Meta AI to compete with Google and OpenAI. LLaMA is a state-of-the-art model capable of running on smaller devices with much less computing power. Since it is open-source, it also invites more creativity and gives smaller research teams the opportunity to create better versions and applications.

As a result, several promising fine-tuned versions of LLaMA are already circulating online. Each of these models has its own training dataset, and each was trained at a fraction of the cost normally required for a large language model.

Different Derivatives of Meta’s LLaMA

Since LLaMA is open source and Meta released its weights, many developers and researchers have fine-tuned the model, either by using better datasets or by slightly changing the architecture, to achieve specific, high-quality results. Here are some of the top derivatives of LLaMA currently available:

Stanford Alpaca: Alpaca was one of the first LLaMA derivatives. It is a fine-tuned version of LLaMA 7B. Alpaca was trained on 52K instruction-following examples generated by text-davinci-003, with the aim of producing outputs comparable to those of OpenAI’s model.

GPT4ALL: This fine-tuned derivative of LLaMA started from roughly 800,000 collected assistant responses, which were curated into about 430,000 assistant-style interaction pairs for training. It is also available in a quantized 4-bit version.

Guanaco: Guanaco is an instruction-following language model fine-tuned from the LLaMA 7B model. Its dataset adds 534,530 entries to those of the Alpaca dataset and covers seven languages.
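To see why a quantized 4-bit version matters for running these models locally, here is a rough back-of-the-envelope sketch. It assumes a 7B-parameter model (the LLaMA 7B size mentioned above) and counts only the memory taken by the weights, ignoring activations and other runtime overhead. The function name is ours, purely for illustration.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in gigabytes (10^9 bytes)."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    params_7b = 7e9  # assumption: a 7B-parameter model
    for bits in (16, 8, 4):
        size = weight_memory_gb(params_7b, bits)
        print(f"{bits:>2}-bit weights: about {size:.1f} GB")
```

Under these assumptions, going from 16-bit to 4-bit weights cuts the weight footprint to a quarter, which is what makes consumer hardware a realistic target.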

Other Adaptations of LLaMA

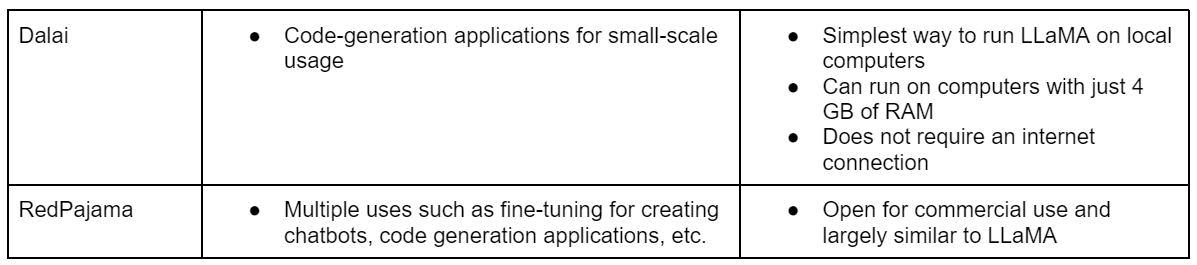

Dalai: Dalai is a way to run LLaMA on local machines. It needs just 4 GB of RAM and does not require an internet connection. Dalai requires Python <= 3.10 and Node.js >= 18.

RedPajama: RedPajama is not a LLaMA derivative. It is an open reproduction of the LLaMA training dataset that can be used commercially.
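If you want to try Dalai, it can help to check the version requirements listed above (Python <= 3.10 and Node.js >= 18) before installing. The snippet below is a minimal sketch of such a check. The helper names are hypothetical and not part of Dalai itself, and we assume that "Python <= 3.10" means any 3.10.x release is acceptable.

```python
import shutil
import subprocess
import sys


def parse_version(text: str) -> tuple:
    """Turn a string such as 'v18.16.0' or '3.10.4' into a tuple of ints."""
    return tuple(int(part) for part in text.strip().lstrip("v").split("."))


def python_ok(version: tuple) -> bool:
    """Python <= 3.10: compare major and minor only, so 3.10.x passes."""
    return tuple(version[:2]) <= (3, 10)


def node_ok(version: tuple) -> bool:
    """Node.js >= 18: only the major version matters."""
    return version[0] >= 18


if __name__ == "__main__":
    print("Python OK:", python_ok(tuple(sys.version_info[:3])))
    if shutil.which("node"):
        result = subprocess.run(
            ["node", "--version"], capture_output=True, text=True
        )
        print("Node.js OK:", node_ok(parse_version(result.stdout)))
    else:
        print("Node.js not found on PATH")
```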

Apart from these, we also have Koala and Vicuna, two of the most capable LLaMA derivatives. We’ll give you a comparative overview of these two LLaMA-based language models below.

Koala: UC Berkeley’s Fine-Tuned LLaMA Derivative

Koala is a LLaMA derivative developed by the Berkeley Artificial Intelligence Research Lab at UC Berkeley. It was announced in April 2023, shortly after LLaMA’s release. The researchers who developed Koala argue that it performs better than LLaMA because it has been trained on a small, high-quality dataset.

Typically, open-source LLMs underperform closed ones because they’re trained on inferior datasets. For instance, whereas LLaMA has been trained exclusively on publicly available data, closed models such as OpenAI’s GPT are trained on thousands of paid blogs, research papers, and archives.

Koala’s results on various tests demonstrate that this shortcoming of smaller open-source models can be somewhat overcome by training them on high-quality datasets. Here’s a brief overview of Koala’s fine-tuning details, such as datasets used and performance achieved.

Training Dataset

Koala was trained on an NVIDIA DGX server with 8 A100 GPUs, requiring about 6 hours for training 2 epochs. Instead of using a large dataset, the Berkeley team focused on creating a small yet high-quality, human-rated dataset.
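To put these numbers in perspective, here is a small worked calculation. It combines the 8 GPUs and 6 hours above with the roughly $100 training cost quoted for Koala later in this post. The implied hourly rate is our own derivation for illustration, not a figure reported by the Koala team.

```python
def gpu_hours(num_gpus: int, wall_clock_hours: float) -> float:
    """Total GPU-hours consumed by a training run."""
    return num_gpus * wall_clock_hours


def implied_rate_per_gpu_hour(total_cost: float, total_gpu_hours: float) -> float:
    """Cost per GPU-hour implied by a total cost and a GPU-hour count."""
    return total_cost / total_gpu_hours


if __name__ == "__main__":
    hours = gpu_hours(num_gpus=8, wall_clock_hours=6)
    rate = implied_rate_per_gpu_hour(total_cost=100.0, total_gpu_hours=hours)
    print(f"GPU-hours: {hours}")
    print(f"Implied cost per A100 GPU-hour: ${rate:.2f}")
```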

Berkeley researchers have trained Koala on the responses of ChatGPT and other similar models. According to the blog post announcing Koala:

“Koala is fine-tuned on freely available interaction data scraped from the web, but with a specific focus on data that includes interaction with highly capable closed-source models such as ChatGPT.”

Koala’s dialogue-based training data includes high-quality answers to human-like questions, making it better at answering and feedback tasks. Koala 13B, which is the resulting LLM, is competitive with both open-source and closed-source large language models on question-answering tasks. Below is a demonstration of how Koala performed compared to existing LLMs.

The main datasets used to train Koala are the following:

ShareGPT: About 30K responses were taken from this dataset after removing non-English questions and deduplicating on the user-query level.

HC3: This dataset contributes a total of about 87K question-answer examples, covering roughly 24K questions answered by both humans and ChatGPT.

OIG: Koala uses part of the Open Instruction Generalist dataset curated by LAION, namely the grade-school-math-instructions, poetry-to-songs, and plot-screenplay-books-dialogue components, which together amount to about 30K examples.

Stanford Alpaca: This dataset includes 52K examples generated by OpenAI’s text-davinci-003.

Anthropic HH: The dataset includes around 160K human-rated examples, providing both capabilities and additional safety protections for the model.

OpenAI WebGPT: Includes 20K comparisons where each example comprises a question, a pair of model answers, and metadata.

OpenAI Summarization: Includes approximately 93K examples, each consisting of human evaluation and feedback on the model-generated summarizations.

HC3, OIG, and Alpaca datasets are single-turn question answering while the ShareGPT dataset is high-quality dialogue data.
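Two of the preprocessing ideas above, deduplicating on the user-query level and mixing single-turn data with dialogue data, can be sketched in a few lines. This is only an illustration under our own assumptions: the turn format, the normalization rule, and the function names are hypothetical, and Koala’s actual preprocessing code may work differently.

```python
def normalize_query(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical queries match."""
    return " ".join(text.lower().split())


def first_user_query(conversation: list) -> str:
    """Return the text of the first user turn, or '' if there is none."""
    for turn in conversation:
        if turn["role"] == "user":
            return turn["text"]
    return ""


def dedupe_on_user_query(conversations: list) -> list:
    """Keep only the first conversation seen for each opening user query."""
    seen = set()
    kept = []
    for conversation in conversations:
        key = normalize_query(first_user_query(conversation))
        if key not in seen:
            seen.add(key)
            kept.append(conversation)
    return kept


def qa_to_dialogue(question: str, answer: str) -> list:
    """Wrap a single-turn question-answer pair as a two-turn dialogue."""
    return [
        {"role": "user", "text": question},
        {"role": "assistant", "text": answer},
    ]


if __name__ == "__main__":
    data = [
        qa_to_dialogue("What is LLaMA?", "A language model from Meta."),
        qa_to_dialogue("what is   llama?", "A duplicate question."),
        qa_to_dialogue("What is JAX?", "A numerical computing library."),
    ]
    print(len(dedupe_on_user_query(data)), "conversations kept")
```

Representing single-turn examples as two-turn dialogues lets both kinds of data share one training format.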

Other Koala Facts

Koala developers released EasyLM, the framework which was used to fine-tune the model. EasyLM is a customizable codebase that can be used to train other large language models in JAX.

Koala has some shortcomings, including hallucination and a tendency to give incorrect responses in a highly confident tone. It also lacks common sense and may generate harmful answers.

Training Koala costs around $100.

Koala inherits the biases of its training datasets, especially the dialogue datasets. The researchers have used OpenAI’s content moderation filter to flag unsafe content.

Aside from EasyLM, the developers have also released Koala model weights diff against LLaMA, an online interactive demo of the LLM, the code for preprocessing the training data, and the test set of queries.
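A "weights diff" means that only the difference between the fine-tuned weights and the original LLaMA weights is published, so users need the base weights to reconstruct the model. The toy sketch below shows the idea, assuming the diff is a simple element-wise difference per parameter. Real checkpoints are large tensors handled by the project’s own scripts, and every name here is hypothetical.

```python
def make_diff(base: dict, tuned: dict) -> dict:
    """Element-wise difference (tuned - base) for every named parameter."""
    return {
        name: [t - b for t, b in zip(tuned[name], base[name])]
        for name in tuned
    }


def apply_diff(base: dict, diff: dict) -> dict:
    """Recover tuned weights by adding the diff back onto the base weights."""
    return {
        name: [b + d for b, d in zip(base[name], diff[name])]
        for name in diff
    }


if __name__ == "__main__":
    # Toy "checkpoints" with one parameter each, standing in for real tensors.
    base_weights = {"layer0.weight": [1.0, 2.0, 3.0]}
    tuned_weights = {"layer0.weight": [1.5, 1.75, 3.0]}

    diff = make_diff(base_weights, tuned_weights)
    recovered = apply_diff(base_weights, diff)
    print("diff:", diff)
    print("recovered matches tuned:", recovered == tuned_weights)
```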

Vicuna: Fine-Tuned LLaMA Derivative

Vicuna is another of the most significant LLaMA derivatives. Developed by researchers at UC Berkeley, Stanford, Carnegie Mellon University, and UC San Diego, it aims to address a key shortcoming of closed LLMs like ChatGPT, whose training and architecture details are not public. It has been fine-tuned primarily on conversations that users shared through ShareGPT.

Vicuna Architecture

Vicuna keeps LLaMA’s underlying model architecture. The key way in which it differs from other LLaMA derivatives like Alpaca is in how it is trained. Here’s a detailed overview of the main changes.

Memory optimizations: Vicuna extends the maximum context length from Alpaca’s 512 tokens to 2,048, which makes it better at following longer conversations but substantially increases GPU memory requirements. The researchers relieved this memory pressure with gradient checkpointing and flash attention.

Multi-round conversations: Vicuna can carry out multi-round conversations because the training loss was adjusted to account for them, with the fine-tuning loss computed only on the chatbot’s output.

Cost reduction: Vicuna uses a larger dataset and a longer sequence length than Alpaca, which would otherwise make the model considerably more expensive to train. To cut costs, the team used SkyPilot managed spot, which runs on cheaper spot instances and recovers automatically from preemptions. According to the Vicuna team:

“This solution slashes costs for training the 7B model from $500 to around $140 and the 13B model from around $1K to $300.”
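The multi-round conversation adjustment described above is usually implemented as a loss mask: every token stays in the input, but only tokens from the chatbot’s turns count toward the loss. Here is a minimal, framework-free sketch of that idea. The data layout and function names are our own assumptions, and Vicuna’s actual training code may be organized differently.

```python
def assistant_loss_mask(turns: list) -> tuple:
    """Flatten (role, tokens) turns into a token list and a 0/1 loss mask.

    Tokens from 'assistant' turns get mask 1, all other tokens get mask 0.
    """
    tokens, mask = [], []
    for role, turn_tokens in turns:
        tokens.extend(turn_tokens)
        flag = 1 if role == "assistant" else 0
        mask.extend([flag] * len(turn_tokens))
    return tokens, mask


def masked_mean_loss(per_token_losses: list, mask: list) -> float:
    """Average the per-token losses over masked-in positions only."""
    counted = sum(mask)
    if counted == 0:
        return 0.0
    total = sum(loss * m for loss, m in zip(per_token_losses, mask))
    return total / counted


if __name__ == "__main__":
    conversation = [
        ("user", ["Hi", "there"]),
        ("assistant", ["Hello", "!"]),
        ("user", ["Bye"]),
        ("assistant", ["Goodbye"]),
    ]
    tokens, mask = assistant_loss_mask(conversation)
    # Made-up per-token losses, one per token, purely for illustration.
    losses = [5.0, 5.0, 1.0, 2.0, 5.0, 3.0]
    print("mask:", mask)
    print("loss on assistant tokens only:", masked_mean_loss(losses, mask))
```

With this mask, the model is not penalized for failing to predict what the user says, only for its own replies.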

Together, these changes to how Vicuna is trained aim to deliver better performance at a cost that remains affordable for researchers and developers.
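As a quick sanity check on the quoted cost figures, the relative savings can be computed directly. The dollar amounts below come from the quote above (treating $1K as $1,000), and the helper function is ours.

```python
def relative_savings(cost_before: float, cost_after: float) -> float:
    """Fraction of the original cost that was saved."""
    return (cost_before - cost_after) / cost_before


if __name__ == "__main__":
    quoted_costs = {
        "7B": (500.0, 140.0),
        "13B": (1000.0, 300.0),
    }
    for model, (before, after) in quoted_costs.items():
        saved = relative_savings(before, after)
        print(f"{model}: ${before:.0f} -> ${after:.0f}, about {saved:.0%} saved")
```

In both cases the quoted figures correspond to a saving of roughly 70%.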

Other Vicuna Facts

The serving system built for Vicuna can serve multiple models with distributed workers.

To judge Vicuna against other LLMs, the researchers employed GPT-4. This is one of the first instances of using an LLM to judge the quality of another LLM’s output. The results indicate that Vicuna is better than Alpaca and LLaMA at question answering and is competitive with ChatGPT and Bard.

Vicuna is also prone to hallucinating and, like other LLMs, it is not very good at math and reasoning tasks.

While the researchers have released the Vicuna weights, they will not release the fine-tuning dataset like Koala.

Koala Vs. Vicuna

A Reddit user recently used GPT-4 to compare Koala and Vicuna on different tasks. Here are the key results:

Koala is better at creativity than Vicuna, but only slightly so. GPT-4 gave Koala a creativity score of 8.5/10, compared with Vicuna’s 8/10. The models’ creativity was tested using a prompt asking them both to write stories.

Vicuna is slightly better than Koala in terms of objective knowledge. Koala got a score of 9/10 while Vicuna got 9.83/10.

Finally, at code generation, Vicuna beats Koala by a mile. Koala gets 4/10 while Vicuna gets 8.3/10.

Conclusion

Because it is open source, LLaMA has made it possible to create valuable LLMs at a significantly lower training cost than OpenAI’s and Google’s large language models.

Be it Alpaca, Koala, Vicuna, or any other LLaMA derivative, its ability to achieve state-of-the-art results on low-cost professional hardware shows how easy it is becoming to train and deploy these models.

While most of these derivatives are only legitimately available for research, LLaMA 2’s release will make commercial derivatives available soon. It remains to be seen how they will be deployed and fine-tuned for efficiency.