Large Action Models vs Large Language Models

Explore how Large Action Models (LAMs) are automating complex workflows in regulated industries, and why they're the future of AI-powered automation.

Key Takeaways

Large Action Models (LAMs) are the next frontier in artificial intelligence, combining language understanding with the ability to act on information.

LAMs can automate complex workflows in finance and insurance, reducing errors, saving time, and improving decision-making.

Compared to LLMs, LAMs offer greater autonomy, a wider range of capabilities, and deeper integration with existing systems.

Challenges of LAM adoption include data quality, security, and ethical considerations, requiring proactive solutions.

Despite challenges, the potential of LAMs is immense, paving the way for a more efficient, accurate, and innovative future of work.

Last year, I started Multimodal, a Generative AI company that helps organizations automate complex, knowledge-based workflows using AI Agents.

I have been working with large language models for years now. At Multimodal, our goal is to automate complex workflows in finance and insurance, and LLMs, with their diverse capabilities, drive this intelligent automation. But over the past few months, I've been reading about and working with large action models. They're a game-changer for knowledge-based work and go a step beyond LLMs when it comes to taking action.

In this article, I'll explore where a large action model offers more business value than an LLM.

Manual workflows: the bane of regulated industries

Most regulated industries struggle with complex workflows that are still largely manual. For example, insurance experts still review each claim by hand, verify the information, and calculate payouts. It's a process prone to errors, delays, and frustrated customers.

In my last article about AI Agents, I emphasized how agents built on LLMs and grounded in a think/act loop can intelligently automate these workflows and augment experts. Large Action Models, however, can do it much more efficiently.

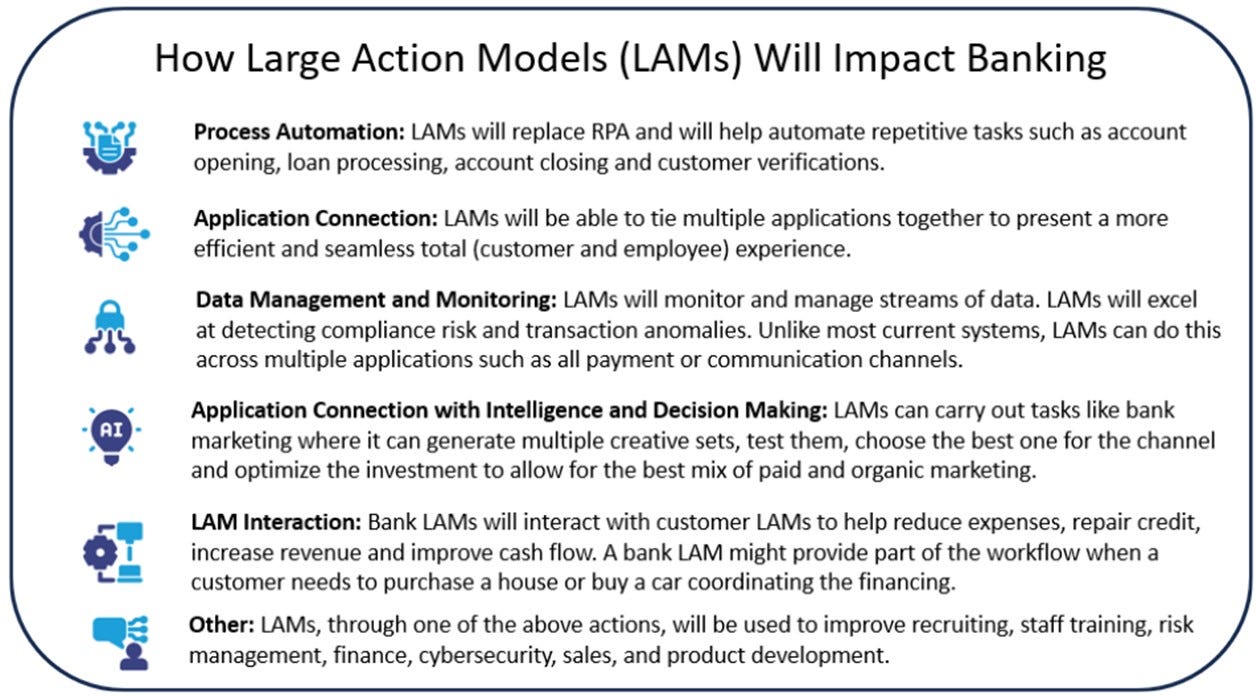

While LLMs like ChatGPT have impressed us with their conversational prowess, LAMs are built to do more than just chat. They're designed to navigate complex systems, make decisions, and execute actions with minimal human intervention. They make AI more autonomous.

I've seen firsthand how AI models are transforming businesses. We've worked with companies like Direct Mortgage to automate loan applications, cutting approval time by a factor of 20. We've even helped an insurance company streamline its underwriting process, achieving 95% accuracy and reducing processing time to under 15 seconds.

LAMs will do all this much better. Before we dive into the how and why, let’s talk about LLMs first.

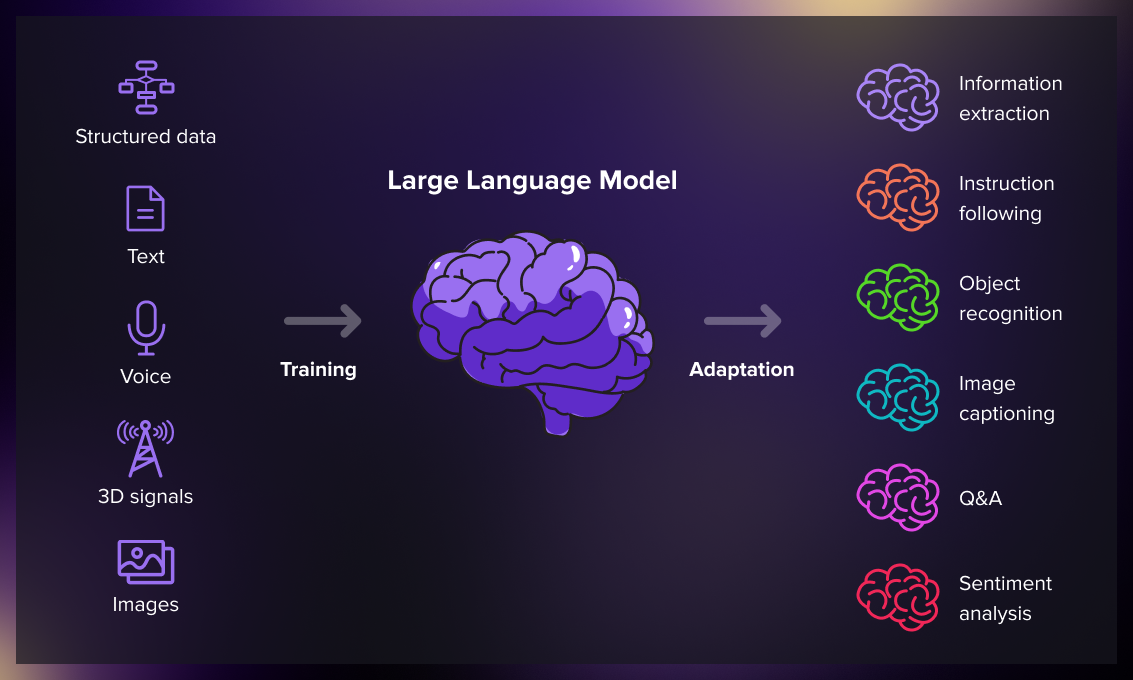

LLMs: How they work

Let's start with the basics. Large Language Models, or LLMs, are the AI powerhouses you've likely heard about – think ChatGPT, GPT-4, and their open-source counterparts. These models are trained on massive datasets of text and code, allowing them to understand and generate human-like language with impressive fluency.

They're the brains behind conversational AI, the engines of automated content creation, and the interpreters of vast amounts of unstructured data.

In finance and insurance, LLMs are already making waves. Morgan Stanley, for instance, uses an LLM-powered assistant that helps its financial advisors search internal research and answer client questions at scale. JPMorgan Chase has harnessed LLMs to analyze complex legal documents, extracting key information for regulatory compliance.

And it's not just about using them out of the box. LLMs can be fine-tuned on specific tasks and datasets, becoming even more adept at understanding industry jargon, spotting patterns in financial data, or even generating code to automate specific processes.

However, while LLMs can perform some actions, taking action is not their forte. They're fundamentally designed for language processing, not for navigating the complex web of systems and APIs that power most business operations.

This means that LLM-based "action" systems often require extensive engineering and customization.
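To give a feel for that engineering, here is a minimal sketch of the glue code an LLM-based system typically needs before it can act on a model's reply. It assumes the model has been prompted to answer with a JSON object containing `name` and `args`; the action names and the `parse_action` helper are hypothetical, invented for illustration, and not taken from any specific product.

```python
import json

# Hypothetical allow-list: each action name maps to the arguments it requires.
ALLOWED_ACTIONS = {
    "update_claim_status": {"claim_id", "status"},
    "fetch_policy": {"policy_id"},
}


def parse_action(llm_output: str) -> dict:
    """Turn raw model text into a validated action, or raise ValueError."""
    try:
        action = json.loads(llm_output)
    except json.JSONDecodeError as exc:
        raise ValueError(f"model did not return valid JSON: {exc}") from exc
    if not isinstance(action, dict):
        raise ValueError("expected a JSON object")

    name = action.get("name")
    args = action.get("args", {})
    if name not in ALLOWED_ACTIONS:
        raise ValueError(f"unknown action: {name!r}")
    if not isinstance(args, dict):
        raise ValueError("'args' must be a JSON object")

    missing = ALLOWED_ACTIONS[name] - set(args)
    if missing:
        raise ValueError(f"missing arguments: {sorted(missing)}")
    return {"name": name, "args": args}


action = parse_action('{"name": "fetch_policy", "args": {"policy_id": "P-1"}}')
print(action["name"])  # fetch_policy
```

Every new action means another entry in the allow-list, another validation rule, and another integration to maintain, which is where much of the customization effort goes.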

Even today, we customize LLMs and integrate them into existing systems. But with LAMs, both customization and integration become far simpler and go deeper.

Building AI Agents with LAMs

While LLMs are designed primarily for text understanding and generation, LAMs are engineered to interact with external environments as they perform tasks. They can help build more sophisticated agentic workflows. They are essentially a combination of:

Large Language Models (LLMs): The foundation of a large action model is typically a powerful LLM, which provides the ability to understand natural-language instructions and generate text responses. This could be any state-of-the-art model like GPT-4, PaLM 2, or an open-source alternative.

Action execution layer: LAMs are equipped with an action execution layer that allows them to translate their language understanding into concrete actions. This layer typically includes:

API wrappers: These interfaces let the LAM execute tasks by interacting with external APIs (e.g., Salesforce, Stripe, Google Sheets) to fetch data, update records, or trigger workflows.

Code generation: Some LAMs can dynamically generate code snippets to perform specific tasks, such as data transformations or calculations.

Tool integration: LAMs can be integrated with a variety of tools and platforms, such as web browsers, databases, or even robotic process automation (RPA) software, to automate a wider range of tasks.

Memory and state management: LAMs need to keep track of their actions and the state of the environment they are interacting with. This is often achieved through a combination of:

Short-term memory: A temporary store for recent information, such as the last few actions taken or the current state of a task.

Long-term memory: A persistent store of knowledge about the world, such as facts, procedures, or user preferences. This can be implemented using databases, knowledge graphs, or even the LLM's own internal memory mechanisms.

Planning and decision-making: To achieve their goals, LAMs often need to plan a sequence of actions and make decisions based on the available information. This can be done using:

Rule-based systems: A set of predefined rules that dictate how the LAM should behave in different situations.

Reinforcement learning: A technique where the LAM learns to make optimal decisions by receiving feedback (rewards or penalties) for its actions.

Decision transformers: A type of sequence model that learns to predict the next action from past states and actions together with the desired outcome.
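The components above can be sketched in a few lines of code. This is a toy illustration under stated assumptions, not how any particular LAM is implemented: the "API wrappers" are local stubs with no network calls, the planner is the simplest option from the list (rule-based, not reinforcement learning or a decision transformer), and every name here (`ClaimAgent`, `fetch_claim`, `auto_approve_limit`, and so on) is hypothetical.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, Tuple


# Action execution layer: stub "API wrappers" standing in for real systems.
def fetch_claim(claim_id: str) -> dict:
    return {"claim_id": claim_id, "amount": 1200.0, "policy_limit": 5000.0}


def approve_claim(claim_id: str) -> dict:
    return {"claim_id": claim_id, "status": "approved"}


def escalate_claim(claim_id: str) -> dict:
    return {"claim_id": claim_id, "status": "needs_human_review"}


TOOLS: Dict[str, Callable[[str], dict]] = {
    "fetch_claim": fetch_claim,
    "approve_claim": approve_claim,
    "escalate_claim": escalate_claim,
}


@dataclass
class ClaimAgent:
    # Long-term memory: persistent settings or preferences.
    long_term: dict = field(default_factory=dict)
    # Short-term memory: only the last few (tool, result) pairs are kept.
    short_term: Deque[Tuple[str, dict]] = field(
        default_factory=lambda: deque(maxlen=5)
    )

    def plan(self, state: dict) -> str:
        """Rule-based planner: return the next tool name, or '' when done."""
        if "claim" not in state:
            return "fetch_claim"
        if "status" in state:
            return ""
        claim = state["claim"]
        limit = min(
            claim["policy_limit"],
            self.long_term.get("auto_approve_limit", 0.0),
        )
        return "approve_claim" if claim["amount"] <= limit else "escalate_claim"

    def run(self, claim_id: str) -> dict:
        state: dict = {}
        tool = self.plan(state)
        while tool:
            result = TOOLS[tool](claim_id)
            self.short_term.append((tool, result))
            if tool == "fetch_claim":
                state["claim"] = result
            else:
                state["status"] = result["status"]
            tool = self.plan(state)
        return state


agent = ClaimAgent(long_term={"auto_approve_limit": 2000.0})
print(agent.run("C-42")["status"])  # approved
```

With no `auto_approve_limit` in long-term memory, the same claim is routed to `escalate_claim` instead, which is the kind of conservative default you would want in a regulated workflow.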

Key technical differences from LLMs

System integration: LAMs are built with integration in mind, allowing them to interact with a wide range of software and systems through APIs and other interfaces.

Memory and state management: LAMs require more sophisticated mechanisms for tracking their actions and the state of the environment they are operating in.

Planning and decision-making: LAMs often employ advanced techniques like reinforcement learning or decision transformers to plan and execute complex tasks.

Whether you start from an LLM or a LAM, you need to build AI Agents on top of it to apply it in a business context and harness its capabilities. Each has its strengths and weaknesses, so let's break it down.

A good example I can think of in the LAM space right now is Adept AI. However, this technology is so new that there are very few companies using it for agentic automation.

The advantages of LAM-based agents include:

Increased autonomy: These agents can operate with minimal human supervision, freeing up your team to focus on higher-value activities.

A broader range of tasks: LAMs can handle a wider variety of tasks than a large language model, from processing invoices to managing inventory. They're not limited to language-based interactions; they can interact with any system that has an API.

Deeper integration: LAMs can seamlessly integrate with your existing workflows, making them a natural extension of your team. This means less disruption and a smoother transition to AI-powered automation.

End-to-end workflow automation: This is where LAMs truly shine in complex industries. They can orchestrate entire processes, from data collection and analysis to decision-making and execution. This makes them ideal for tasks like loan origination, claims processing, or underwriting.

I also host an AI podcast and content series called “Pioneers.” This series takes you on an enthralling journey into the minds of AI visionaries, founders, and CEOs who are at the forefront of innovation through AI in their organizations.

To learn more, please visit Pioneers on Beehiiv.

Challenges of building AI Agents with LAMs

Generative AI is evolving fast, and right now, we know very little about the true possibilities of LAMs. I do see them automating knowledge-based work, especially through agent-based automation. They understand language and can complete tasks autonomously.

Their ability to perform actions that people carry out by hand today will help automate end-to-end workflows. But considering this technology is still in its early stages, there will be some challenges:

Data quality: Ensuring data accuracy, completeness, and bias-free representation is crucial for reliable decision-making. This often requires investing in robust data management and governance practices.

Security: With all of my clients, I get questions about data privacy and security. LAMs will still be prone to these issues. Ensuring privacy in regulated industries requires a multi-layered approach, including encryption, access controls, and regular security audits.

Ethical considerations: As LAMs are more autonomous, questions of bias, fairness, and accountability become increasingly important. Ensuring that AI agents are transparent, explainable, and aligned with ethical principles is essential. This involves careful algorithmic design and regular monitoring of model performance.
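Two of the controls mentioned above, access controls and an audit trail for accountability, can be sketched as a thin wrapper around every action an agent takes. This is an assumption-laden illustration, not a compliance-ready design: the roles, action names, and `guarded_call` helper are hypothetical, and the truncated hash is only a placeholder for proper tokenization or encryption of sensitive fields.

```python
import hashlib
from datetime import datetime, timezone

# Hypothetical role-to-action permissions.
PERMISSIONS = {
    "claims_agent": {"fetch_claim", "approve_claim"},
    "read_only_agent": {"fetch_claim"},
}
# Hypothetical set of argument names that must never appear in logs.
SENSITIVE_FIELDS = {"ssn", "account_number"}

audit_log = []


def redact(args: dict) -> dict:
    """Replace sensitive values with a short fingerprint before logging."""
    return {
        key: (
            "sha256:" + hashlib.sha256(str(value).encode()).hexdigest()[:12]
            if key in SENSITIVE_FIELDS
            else value
        )
        for key, value in args.items()
    }


def guarded_call(role: str, action: str, args: dict, tool):
    """Check permissions, record the attempt, then run the tool if allowed."""
    allowed = action in PERMISSIONS.get(role, set())
    audit_log.append(
        {
            "time": datetime.now(timezone.utc).isoformat(),
            "role": role,
            "action": action,
            "args": redact(args),
            "allowed": allowed,
        }
    )
    if not allowed:
        raise PermissionError(f"{role} may not perform {action}")
    return tool(**args)
```

Because denied attempts are logged as well as successful ones, reviewers can later see what an agent tried to do, not only what it did.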

If you’re a CTO or a business leader looking to automate part of your business, you’re probably enticed by all that you’ve read about LAMs here. Here’s my advice before you start:

LAMs are still nascent, and while we’re waiting for them to develop, LLM-based AI Agents are amazing at complex work automation. The right AI partner will provide seamless integration, privacy, and ease of use even with LLMs.

Choose a small workflow so you can realize AI ROI faster. You can always replicate and expand, but it’s important to start small and right.

We’ll talk more about complex work automation in two weeks.

See you then,

Ankur.