How to Build Agentic AI for Enterprises

Building agentic AI systems for enterprises can be an engineering nightmare. Learn what techniques, principles, and methods help.

Key Takeaways

Agentic AI replaces stateless ML with stateful architectures using vector DBs/RAG for enterprise-scale context tracking.

Multi-agent systems with specialized security, remediation, and compliance agents resolve IT incidents with less human input; one cited deployment cut device setup time by 80%.

Supply chain agents balance cost/sustainability via ERP API integration and reinforcement learning conflict resolution.

Production systems require governance layers with ABAC controls and circuit breakers for HIPAA/SOC2 compliance.

Successful implementation demands phased rollouts: process mining → bounded pilots → Zero Trust scaling.

In 2023, I started Multimodal, a Generative AI company that helps organizations automate complex, knowledge-based workflows using AI Agents.

Building agentic workflows for enterprises comes with several engineering challenges. Everything from model to workflow selection can seem daunting, especially with continuous updates to frameworks and models. In this article, I will break down the key things you should keep in mind if you’re spearheading an agentic AI initiative in an enterprise.

The Agentic Paradigm Shift: Technical Differentiation

Agentic AI systems mark a fundamental departure from traditional machine learning pipelines through three core architectural shifts. First, statefulness replaces stateless inference: where conventional ML models process isolated data batches, agentic architectures maintain persistent context across interactions. This enables continuity in complex workflows like supply chain management, where autonomous agents track progress across multiple systems while protecting sensitive data.
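Here is a minimal Python sketch of the stateless/stateful contrast, assuming an in-memory session object. `stateless_step` and `AgentSession` are hypothetical names; a production system would persist this state in a database or vector store rather than in process memory.

```python
from dataclasses import dataclass, field


def stateless_step(event: str) -> str:
    # Conventional inference: the output depends only on this one input.
    return f"processed {event}"


@dataclass
class AgentSession:
    """Hypothetical stateful agent session that keeps context across calls."""
    workflow_id: str
    history: list[str] = field(default_factory=list)
    completed: set[str] = field(default_factory=set)

    def step(self, event: str) -> str:
        # Record every event so later steps can see what already happened.
        self.history.append(event)
        if event.startswith("done:"):
            self.completed.add(event.removeprefix("done:"))
        return f"{len(self.history)} events seen, completed={sorted(self.completed)}"


session = AgentSession(workflow_id="po-1042")
session.step("done:reserve_inventory")
print(session.step("done:book_carrier"))
# 2 events seen, completed=['book_carrier', 'reserve_inventory']
```

The second call "knows" about the first without the caller resending it, which is the continuity the paragraph describes.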

Second, multi-agent orchestration supersedes monolithic models. OpenAI's framework exemplifies this by separating roles into specialized AI agents (analysis, decision, execution) that collaborate dynamically. Where a single model must handle every task across vast amounts of data, these distributed systems assign specific tasks to models optimized for them: a security agent handles access controls while a logistics agent processes real-time sensor data.
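The role separation can be sketched as a registry that maps task types to specialized agents. The agent functions below are hypothetical placeholders for model- or tool-backed agents; this illustrates the pattern and is not OpenAI's API.

```python
from typing import Callable


# Hypothetical specialized agents; in production each would wrap its own model or tool.
def analysis_agent(task: dict) -> str:
    return f"analysis of {task['payload']}"


def decision_agent(task: dict) -> str:
    return f"decision on {task['payload']}"


def execution_agent(task: dict) -> str:
    return f"executed {task['payload']}"


# Registry mapping each task type to the agent responsible for it.
AGENTS: dict[str, Callable[[dict], str]] = {
    "analyze": analysis_agent,
    "decide": decision_agent,
    "execute": execution_agent,
}


def dispatch(task: dict) -> str:
    """Route a task to the specialized agent registered for its type."""
    agent = AGENTS.get(task["type"])
    if agent is None:
        raise ValueError(f"no agent registered for task type {task['type']!r}")
    return agent(task)


print(dispatch({"type": "analyze", "payload": "Q3 demand"}))  # analysis of Q3 demand
```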

Third, the autonomy spectrum shifts from rigid rule-based delegation to LLM-driven goal decomposition. Early robotic process automation required constant human input to define tasks; modern agentic systems use large language models, often fine-tuned with reinforcement learning, to break high-level objectives into executable steps. This shift lets AI-powered agents handle complex scenarios, such as dynamic problem-solving in software development pipelines, while circuit breaker protocols preserve human oversight.
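Goal decomposition usually yields steps with dependencies. A minimal sketch, assuming the planner's output has already been parsed into a dependency map (the plan below is hard-coded and hypothetical), uses Python's standard `graphlib` module to derive a valid execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical decomposition of "ship release 2.4".
# Each key is a step; its value is the set of steps it depends on.
# An LLM planner would propose this structure; here it is hard-coded.
plan: dict[str, set[str]] = {
    "write_code": set(),
    "write_tests": {"write_code"},
    "security_scan": {"write_code"},
    "run_ci": {"write_tests", "security_scan"},
    "deploy": {"run_ci"},
}


def execution_order(plan: dict[str, set[str]]) -> list[str]:
    """Return an order in which every step runs after all of its dependencies."""
    return list(TopologicalSorter(plan).static_order())


order = execution_order(plan)
print(order)
```

If the planner ever proposes a circular dependency, `static_order()` raises `graphlib.CycleError`, which is a natural point to reject the plan or escalate to a human.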

Key Technical Components

Modern agentic architectures combine three critical elements:

Hybrid Memory Systems

Vector databases combined with retrieval-augmented generation (RAG) work around the context window limits of large language models

Enables intelligent systems to process enterprise-scale data while tracking progress across interactions

Expanded Action Spaces

API toolchains integrated with code execution engines

Autonomous agents execute tasks ranging from basic interactions to complex processes like automating decision-making in patient data analysis

Resilience Frameworks

Circuit breakers halt agent actions in real time to contain unintended consequences

Human-in-the-loop escalation paths for high-stakes scenarios (financial approvals, medical diagnoses)
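The hybrid memory idea can be illustrated with a toy retrieval step. This sketch swaps a real embedding model and vector database for a bag-of-words vector and an in-memory list (both assumptions made to keep it self-contained); the flow itself (embed, rank by cosine similarity, put only the top matches into the prompt) is the part that carries over.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words vector; a real system would call an embedding model here.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorMemory:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[Counter, str]] = []

    def add(self, text: str) -> None:
        self.items.append((embed(text), text))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


def build_prompt(memory: VectorMemory, question: str, k: int = 2) -> str:
    # Only the top-k snippets enter the prompt, keeping it inside the context window.
    context = "\n".join(memory.search(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


memory = VectorMemory()
memory.add("supplier acme delayed shipment of steel coils")
memory.add("quarterly budget approved for marketing")
memory.add("steel coils inventory below safety stock")
print(memory.search("steel coils status"))
```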

These components enable agentic AI to streamline software development, optimize supply chains, and improve customer satisfaction while maintaining rigorous testing protocols. By integrating with existing enterprise systems through secure access controls, these architectures balance operational efficiency with robust data protection, a critical advancement over earlier generative AI solutions.

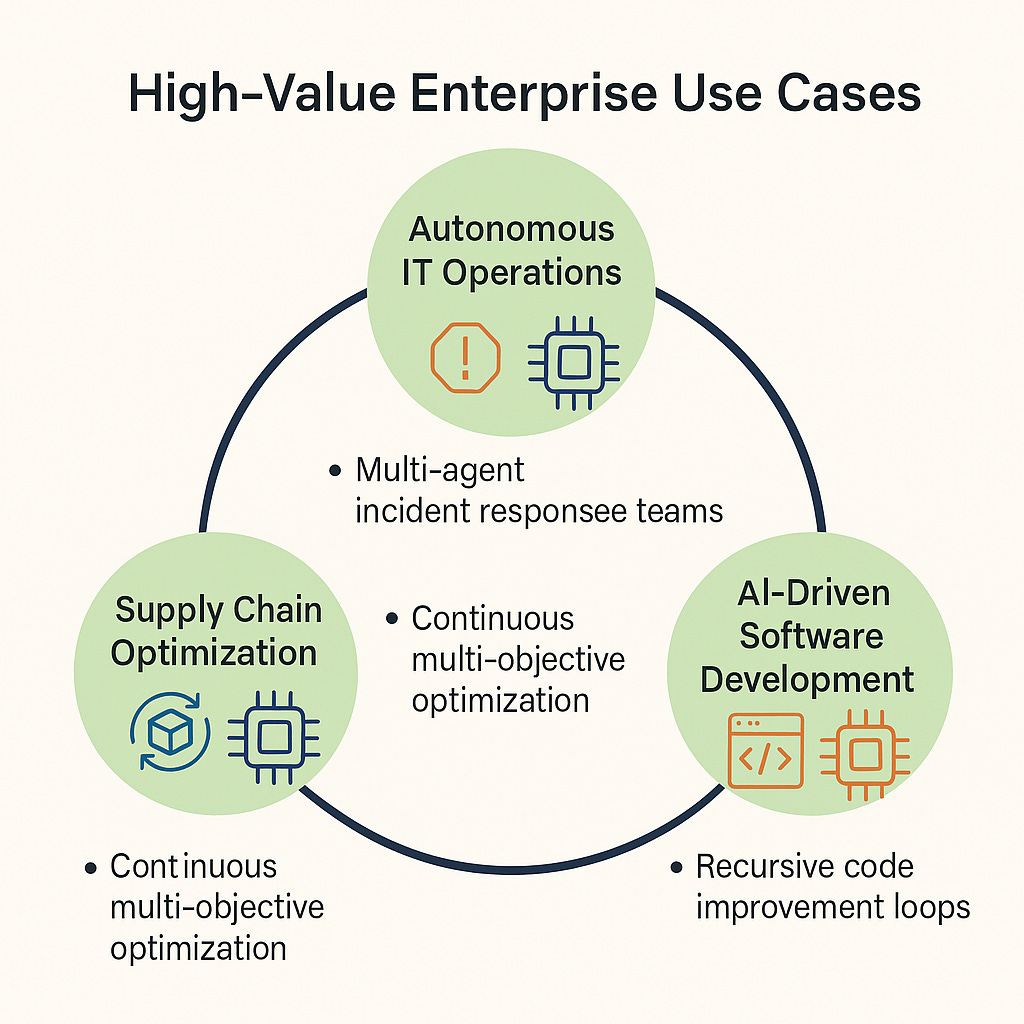

High-Value Enterprise Use Cases (Technical Implementation View)

1. Autonomous IT Operations

Pattern: Multi-agent incident response teams

Modern agentic AI systems deploy specialized AI agents that collaborate to resolve IT incidents without constant human input. For example:

Security Agent: Scans network traffic using machine learning algorithms to detect anomalies in real-time data streams

Remediation Agent: Executes patch deployments through robotic process automation, enforcing access controls to protect sensitive data

Compliance Agent: Generates audit trails automatically, integrating with existing enterprise systems like SAP/Oracle ERP platforms
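A minimal sketch of the hand-off between these three roles, assuming a simple z-score test as the security agent's anomaly detector (a stand-in for a trained model) and hypothetical function names throughout. The remediation step only returns a string instead of calling a real patch or isolation API.

```python
import statistics
from datetime import datetime, timezone


def security_agent(baseline: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag an observation more than `threshold` standard deviations from the baseline mean."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    return stdev > 0 and abs(observed - mean) / stdev > threshold


def remediation_agent(host: str) -> str:
    # Placeholder: a real agent would call a patching or host-isolation API here.
    return f"isolated {host}"


def compliance_agent(audit_log: list[dict], actor: str, action: str) -> None:
    # Append a timestamped audit entry for every automated action.
    audit_log.append({"ts": datetime.now(timezone.utc).isoformat(), "actor": actor, "action": action})


def handle_incident(host: str, baseline: list[float], observed: float, audit_log: list[dict]) -> str:
    if not security_agent(baseline, observed):
        return "no action"
    result = remediation_agent(host)
    compliance_agent(audit_log, "remediation_agent", result)
    return result


audit_log: list[dict] = []
baseline = [120, 118, 125, 122, 119, 121, 124, 117]  # requests per minute, illustrative
print(handle_incident("web-03", baseline, 480, audit_log))  # isolated web-03
```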

Technical Considerations

Privileged Access Management: AI agents operate with least-privilege permissions, restricted to specific tasks like read-only access to patient data or API-based patch deployment

Real-Time Observability: Platforms like Rakuten SixthSense provide dynamic monitoring of agent-driven actions across multiple systems, enabling rapid debugging of unintended consequences

Case Study: Jamf Pro’s autonomous provisioning agent reduces device setup time by 80% through zero-touch deployment workflows, processing vast amounts of device data while maintaining GDPR compliance
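Least-privilege permissions reduce to a deny-by-default check before every tool call. The grant table, agent names, and `PermissionDenied` exception below are hypothetical; in practice the grants would come from your PAM or identity platform rather than a Python dict.

```python
# Hypothetical per-agent grants: each agent lists exactly the (tool, mode) pairs it may use.
GRANTS: dict[str, set[tuple[str, str]]] = {
    "security_agent": {("network_logs", "read")},
    "remediation_agent": {("patch_api", "execute")},
    "compliance_agent": {("audit_store", "write"), ("network_logs", "read")},
}


class PermissionDenied(Exception):
    pass


def authorize(agent: str, tool: str, mode: str) -> None:
    """Deny by default: allow only an explicit (tool, mode) grant for this agent."""
    if (tool, mode) not in GRANTS.get(agent, set()):
        raise PermissionDenied(f"{agent} may not {mode} {tool}")


authorize("remediation_agent", "patch_api", "execute")  # allowed, returns None
```

Unknown agents get an empty grant set, so they are refused rather than silently allowed.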

2. Supply Chain Optimization

Pattern: Continuous multi-objective optimization

Agentic AI marks a paradigm shift in supply chain management by deploying intelligent systems that balance competing priorities:

Demand Forecasting Agent: Analyzes market trends and real-time sensor data from IoT networks

Logistics Routing Agent: Optimizes transportation using utility functions to resolve conflicts between cost, speed, and sustainability

Compliance Agent: Ensures regulatory adherence while processing sensitive supplier data across external systems
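A utility function turns the cost, speed, and sustainability trade-off into a single comparable score. This sketch assumes min-max normalization and fixed weights (both illustrative choices, as are the route figures); changing the weights is how the business expresses a different priority.

```python
from dataclasses import dataclass


@dataclass
class RouteOption:
    name: str
    cost_usd: float
    transit_days: float
    co2_kg: float


# Illustrative priorities; the weights sum to 1.
WEIGHTS = {"cost": 0.5, "speed": 0.3, "sustainability": 0.2}


def _normalize(value: float, values: list[float]) -> float:
    # Min-max scale so the lowest raw value (cheapest, fastest, cleanest) scores 1.
    lo, hi = min(values), max(values)
    return 1.0 if hi == lo else (hi - value) / (hi - lo)


def utility(option: RouteOption, options: list[RouteOption]) -> float:
    """Weighted sum of normalized objectives; higher is better, range 0 to 1."""
    return (
        WEIGHTS["cost"] * _normalize(option.cost_usd, [o.cost_usd for o in options])
        + WEIGHTS["speed"] * _normalize(option.transit_days, [o.transit_days for o in options])
        + WEIGHTS["sustainability"] * _normalize(option.co2_kg, [o.co2_kg for o in options])
    )


options = [
    RouteOption("air", cost_usd=9000, transit_days=2, co2_kg=5200),
    RouteOption("ocean", cost_usd=2500, transit_days=24, co2_kg=700),
    RouteOption("rail", cost_usd=4000, transit_days=12, co2_kg=900),
]
best = max(options, key=lambda o: utility(o, options))
print(best.name)  # rail
```

With these weights rail narrowly beats ocean; a large enough speed weight would favor air instead.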

Technical Deep Dive

ERP Integration: AI-powered agents interface with SAP/Oracle APIs to synchronize inventory data, automating decision-making for complex workflows

Conflict Resolution: Multi-agent systems apply reinforcement learning to balance objectives (e.g., reducing carbon footprint vs. minimizing costs)

Real-World Impact: C3 AI’s multi-hop orchestration agents enable 15% supply chain cost reductions through adaptive inventory buffering and risk-weighted routing
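Reinforcement learning for this kind of trade-off can start as simply as a multi-armed bandit, the most basic RL setting. The sketch below is an epsilon-greedy learner choosing among three hypothetical routing policies; the reward simulator and its mean values are illustrative assumptions, not C3 AI's method or results.

```python
import random


class EpsilonGreedy:
    """Epsilon-greedy bandit that learns which routing policy earns the highest reward."""

    def __init__(self, arms: list[str], epsilon: float = 0.1, seed: int = 0) -> None:
        self.arms = arms
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {a: 0 for a in arms}
        self.values = {a: 0.0 for a in arms}  # running mean reward per arm

    def select(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)  # explore
        return max(self.arms, key=lambda a: self.values[a])  # exploit

    def update(self, arm: str, reward: float) -> None:
        self.counts[arm] += 1
        self.values[arm] += (reward - self.values[arm]) / self.counts[arm]


# Hypothetical simulator: each policy's reward blends cost and emissions savings.
TRUE_MEAN_REWARD = {"cheapest_first": 0.55, "greenest_first": 0.50, "balanced": 0.65}


def simulate_reward(arm: str, rng: random.Random) -> float:
    return TRUE_MEAN_REWARD[arm] + rng.gauss(0, 0.05)


agent = EpsilonGreedy(list(TRUE_MEAN_REWARD))
env_rng = random.Random(1)
for _ in range(2000):
    arm = agent.select()
    agent.update(arm, simulate_reward(arm, env_rng))
print(max(agent.values, key=agent.values.get))
```

In a real deployment the reward would come from observed outcomes (landed cost, emissions, delays) rather than a simulator.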

3. AI-Driven Software Development

Pattern: Recursive code improvement loops

Autonomous agents are streamlining software development through AI-powered toolchains:

Spec Interpreter: Converts natural language requirements into technical user stories using large language models

Implementation Agent: Generates production-ready code while maintaining compatibility with existing enterprise systems

Security Auditor: Scans for vulnerabilities in AI-generated code, enforcing access controls for sensitive data processing

Test Generator: Creates rigorous testing protocols using real-time data from CI/CD pipelines
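The recursive improvement pattern is a bounded generate, test, and retry loop. In this sketch `fake_generate` stands in for an implementation agent and `run_tests` for a test generator (both hypothetical); the iteration cap is a simple stop condition so a model that never converges cannot loop forever.

```python
from typing import Callable


def improvement_loop(
    generate: Callable[[str, list[str]], str],
    run_tests: Callable[[str], list[str]],
    spec: str,
    max_iterations: int = 3,
) -> tuple[str, list[str], int]:
    """Regenerate code with test failures fed back until tests pass or the budget runs out."""
    feedback: list[str] = []
    code = ""
    for iteration in range(1, max_iterations + 1):
        code = generate(spec, feedback)
        feedback = run_tests(code)
        if not feedback:
            return code, feedback, iteration
    return code, feedback, max_iterations


DRAFTS = ["def add(a, b):\n    return a - b\n", "def add(a, b):\n    return a + b\n"]


def fake_generate(spec: str, feedback: list[str]) -> str:
    # Pretend the model fixes its draft once it sees failing-test feedback.
    return DRAFTS[1] if feedback else DRAFTS[0]


def run_tests(code: str) -> list[str]:
    namespace: dict = {}
    exec(code, namespace)  # tolerable only for fixed demo strings; sandbox real AI output
    result = namespace["add"](2, 3)
    return [] if result == 5 else [f"add(2, 3) returned {result}, expected 5"]


code, failures, iterations = improvement_loop(fake_generate, run_tests, "add two numbers")
print(iterations, failures)  # 2 []
```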

Implementation Challenges

Technical Debt Management: Tools like SonarQube and Amazon CodeGuru track code quality over time, automatically flagging overly complex code for refactoring

Version Control: Git-based tracking of AI artifacts (prompts, chain configurations, generated code) in LangChain workflows keeps agentic systems auditable
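One way to make AI artifacts auditable is to record Git's own content hash for each generated file next to the metadata that produced it. The `provenance_record` schema below is a hypothetical example; `git_blob_id` reproduces how Git computes a blob's object ID in a default SHA-1 repository (SHA-1 over a `blob <size>\0` header plus the content).

```python
import hashlib
import json
from datetime import datetime, timezone


def git_blob_id(content: bytes) -> str:
    """Compute the object ID Git assigns to a blob: SHA-1 of header plus content."""
    header = f"blob {len(content)}\0".encode()
    return hashlib.sha1(header + content).hexdigest()


def provenance_record(path: str, content: bytes, model: str, prompt: str) -> dict:
    # Hypothetical metadata schema linking an AI-generated file to how it was produced.
    return {
        "path": path,
        "blob_id": git_blob_id(content),
        "model": model,
        "prompt_sha256": hashlib.sha256(prompt.encode()).hexdigest(),
        "created_at": datetime.now(timezone.utc).isoformat(),
    }


content = b"def add(a, b):\n    return a + b\n"
record = provenance_record("src/add.py", content, "example-model", "add two numbers")
print(json.dumps(record, indent=2))
```

For a file with exactly this content, `git hash-object src/add.py` should print the same ID, assuming no line-ending or other filters are configured for that path.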

Architectural Patterns for Production Systems

Multi-Agent Framework Design

In my deployments of OpenAI’s framework, I’ve prioritized client-side execution for enterprises handling sensitive data like financial transactions. This approach minimizes server dependency but introduces tradeoffs: no persistent memory or shared state between agents. For static workflows like document processing, it works seamlessly. For dynamic supply chain scenarios requiring real-time ERP data integration, you’ll need supplemental vector databases.

The choice between dynamic task routing and predefined workflows depends on risk tolerance. OpenAI’s delegation model allows agents to autonomously hand off subtasks (e.g., triage agent to research agent), while LangChain enforces rigid pipelines. Through trial and error, I’ve learned hybrid architectures work best: predefined modules for compliance-critical steps (audit trails, access controls) paired with dynamic routing for analyzing market trends.
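The hybrid pattern can be sketched as a fixed sequence of compliance steps that always runs, followed by one dynamically chosen hop. The step and agent names are hypothetical, and a keyword rule stands in for an LLM router so the example stays deterministic.

```python
from typing import Callable

Handler = Callable[[dict], dict]


def access_check(ctx: dict) -> dict:
    # Predefined, compliance-critical: runs on every request, in a fixed order.
    if ctx["user_role"] not in {"analyst", "manager"}:
        raise PermissionError(f"role {ctx['user_role']!r} is not allowed")
    return ctx


def audit_step(ctx: dict) -> dict:
    ctx.setdefault("audit", []).append(f"received: {ctx['request']}")
    return ctx


def market_research(ctx: dict) -> dict:
    ctx["result"] = "market trend summary"
    return ctx


def pricing(ctx: dict) -> dict:
    ctx["result"] = "pricing recommendation"
    return ctx


def route(ctx: dict) -> Handler:
    # Stand-in for an LLM router that picks the next specialist at run time.
    return pricing if "price" in ctx["request"].lower() else market_research


FIXED_STEPS: list[Handler] = [access_check, audit_step]


def run(ctx: dict) -> dict:
    for step in FIXED_STEPS:
        ctx = step(ctx)
    ctx = route(ctx)(ctx)  # the only dynamically chosen hop
    ctx["audit"].append(f"completed: {ctx['result']}")
    return ctx


print(run({"request": "Should we change the price of SKU 12?", "user_role": "analyst"})["result"])
# pricing recommendation
```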

When evaluating frameworks:

LangChain excels in custom chat interfaces but struggles with SOC2-compliant audit trails

LlamaIndex dominates RAG implementations but lacks native tool execution for robotic process automation

OpenAI’s framework sacrifices memory management for simplicity, requiring external systems for context-heavy workflows

Critical Subsystems

Governance Layer

Production systems demand policy engines that surpass basic API key checks. I recommend implementing the following for healthcare:

Attribute-based access controls tied to Azure Active Directory (now Microsoft Entra ID) roles

Explainability pipelines generating timestamped audit trails with decision rationales, critical for HIPAA compliance

Circuit breakers freezing agent pools during anomaly detection events (e.g., abnormal inventory spikes in supply chain agents)
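A minimal sketch of a circuit breaker for an agent pool, assuming a count-based trip rule and a fixed cooldown. `AgentPoolBreaker` and the inventory-spike rule are hypothetical illustrations, not a specific product's API; the `now` parameter exists so the behavior can be tested without waiting on a real clock.

```python
import time
from typing import Optional


class AgentPoolBreaker:
    """Freezes an agent pool after repeated anomalies, then resumes after a cooldown."""

    def __init__(self, max_anomalies: int = 3, cooldown_s: float = 300.0) -> None:
        self.max_anomalies = max_anomalies
        self.cooldown_s = cooldown_s
        self.anomalies = 0
        self.tripped_at: Optional[float] = None

    def is_open(self, now: Optional[float] = None) -> bool:
        """True while the pool is frozen and agents must not act."""
        now = time.monotonic() if now is None else now
        if self.tripped_at is not None and now - self.tripped_at >= self.cooldown_s:
            self.tripped_at = None  # cooldown elapsed: resume and reset the count
            self.anomalies = 0
        return self.tripped_at is not None

    def record(self, anomalous: bool, now: Optional[float] = None) -> None:
        now = time.monotonic() if now is None else now
        if not anomalous:
            self.anomalies = 0  # only consecutive anomalies trip the breaker
            return
        self.anomalies += 1
        if self.anomalies >= self.max_anomalies:
            self.tripped_at = now


def inventory_spike(previous: int, current: int, max_ratio: float = 3.0) -> bool:
    # Hypothetical anomaly rule: stock level jumps by more than max_ratio in one update.
    return previous > 0 and current / previous > max_ratio


breaker = AgentPoolBreaker(max_anomalies=2, cooldown_s=60)
breaker.record(inventory_spike(100, 450), now=0)
breaker.record(inventory_spike(450, 2000), now=1)
print(breaker.is_open(now=2))   # True: the pool is frozen
print(breaker.is_open(now=70))  # False: cooldown elapsed
```

Each agent would check `is_open()` before acting and escalate to a human while it returns True; production breakers usually add a half-open trial phase before fully resuming.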

Performance Optimization

Scaling agentic systems requires intelligent resource allocation:

Agent pooling maintains hot standby instances for high-priority tasks like processing real-time sensor data

Cold start mitigation uses synthetic data mimicking historical enterprise system interactions to pre-warm agents

Cost-aware execution reduced LLM API costs by 40% in my projects: basic interactions went to Mistral-7B, while GPT-4 Turbo was reserved for complex scenario decomposition
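Cost-aware routing can be as simple as a rule that escalates only when a request looks complex. The keyword list, word-count threshold, and model identifier strings below are assumptions for illustration; production routers often use a small classifier instead, and no provider API is called here.

```python
import re

SMALL_MODEL = "mistral-7b"   # placeholder identifiers; use your provider's model names
LARGE_MODEL = "gpt-4-turbo"

# Hypothetical signals that a request needs multi-step reasoning.
COMPLEX_MARKERS = {"plan", "decompose", "diagnose", "tradeoff", "why"}


def choose_model(prompt: str, max_small_words: int = 300) -> str:
    """Route short, simple prompts to the small model and escalate the rest."""
    words = re.findall(r"[a-z0-9']+", prompt.lower())
    needs_reasoning = bool(COMPLEX_MARKERS.intersection(words))
    return LARGE_MODEL if needs_reasoning or len(words) > max_small_words else SMALL_MODEL


print(choose_model("What is the status of order 1182?"))                      # mistral-7b
print(choose_model("Decompose this quarter's supply plan into weekly steps"))  # gpt-4-turbo
```

Matching whole words rather than substrings avoids false escalations such as "explanation" matching "plan".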

Always implement two-tier logging: structured JSON for systems of record and human-readable summaries for oversight teams.
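A minimal sketch of two-tier logging with Python's standard `logging` module. The logger names, event fields, and in-memory streams are assumptions for the demo; in production each logger would get its own handler (for example, a log shipper for the JSON tier and a dashboard or chat channel for the summaries).

```python
import io
import json
import logging

# Two separate loggers so each tier can be shipped to a different destination.
record_log = logging.getLogger("agents.record")    # structured JSON, system of record
summary_log = logging.getLogger("agents.summary")  # readable lines for oversight teams


def log_action(agent: str, action: str, target: str, outcome: str, latency_ms: int) -> dict:
    """Write one event to both tiers and return the structured record."""
    record = {"agent": agent, "action": action, "target": target,
              "outcome": outcome, "latency_ms": latency_ms}
    record_log.info(json.dumps(record, sort_keys=True))
    summary_log.info("%s performed %s on %s: %s in %d ms", agent, action, target, outcome, latency_ms)
    return record


# Demo wiring: capture each tier in its own in-memory stream.
record_stream, summary_stream = io.StringIO(), io.StringIO()
for logger, stream in ((record_log, record_stream), (summary_log, summary_stream)):
    logger.addHandler(logging.StreamHandler(stream))
    logger.setLevel(logging.INFO)
    logger.propagate = False

log_action("remediation_agent", "patch", "web-03", "success", 842)
print(record_stream.getvalue().strip())
print(summary_stream.getvalue().strip())
```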

Implementation Roadmap

Phase 1: Agent Readiness Assessment

Start by process mining enterprise workflows to identify automation candidates. In my work with healthcare clients, tools like Microsoft Power Automate’s process mining module uncovered 40% efficiency gains in patient data routing by analyzing EHR interaction patterns. Simultaneously, evaluate your API ecosystem maturity.

Key activities:

Map high-frequency, low-variance tasks (insurance claims processing, IT ticket routing)

Audit API endpoints for scalability, security, and compatibility with agentic systems

Conduct a sensitive data inventory to identify access control requirements
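One way to operationalize "high-frequency, low-variance" is to count how often each task appears in an event log and measure the spread of its handling time with the coefficient of variation (standard deviation divided by mean). The event data and the thresholds (`min_count`, `max_cv`) below are illustrative assumptions, not output from a process mining tool.

```python
from collections import defaultdict
from statistics import mean, pstdev

# Hypothetical event log extract: (task type, handling time in minutes).
events: list[tuple[str, float]] = [
    ("ticket_routing", 4), ("ticket_routing", 5), ("ticket_routing", 4), ("ticket_routing", 5),
    ("claims_intake", 12), ("claims_intake", 11), ("claims_intake", 13),
    ("contract_review", 30), ("contract_review", 95),
]


def automation_candidates(events: list[tuple[str, float]], min_count: int = 3, max_cv: float = 0.2) -> list[str]:
    """Return task types that occur often and take a consistent amount of time."""
    durations: dict[str, list[float]] = defaultdict(list)
    for task, minutes in events:
        durations[task].append(minutes)
    candidates = []
    for task, values in durations.items():
        cv = pstdev(values) / mean(values)  # coefficient of variation
        if len(values) >= min_count and cv <= max_cv:
            candidates.append(task)
    return sorted(candidates)


print(automation_candidates(events))  # ['claims_intake', 'ticket_routing']
```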

Phase 2: Pilot Design

Select bounded problem spaces where agents can deliver quick wins without disrupting complex workflows. Salesforce’s pilot with Big Brothers Big Sisters of America (BBBSA) succeeded by focusing on mentor matching, a contained use case with clear success metrics. Build observability pipelines from day one.

Critical elements:

Define agent performance SLAs (e.g., 95% accuracy in supply chain demand forecasting)

Implement human oversight loops for high-stakes decisions (medical diagnoses, financial approvals)

Use synthetic data to simulate edge cases in autonomous systems
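The oversight loop and the SLA can both be expressed as small, testable checks. In this sketch the decision categories, the 0.9 confidence floor, and the definition of an "accurate" forecast (within 10% of the actual value) are assumptions; the 95% target mirrors the example SLA in the list.

```python
HIGH_STAKES = {"medical_diagnosis", "financial_approval"}


def requires_human(decision_type: str, confidence: float, min_confidence: float = 0.9) -> bool:
    """Always escalate high-stakes decision types; escalate anything else the agent is unsure of."""
    return decision_type in HIGH_STAKES or confidence < min_confidence


def forecast_accuracy(forecasts: list[float], actuals: list[float], tolerance: float = 0.10) -> float:
    """Share of forecasts within +/- tolerance of the actual value."""
    if len(forecasts) != len(actuals) or not actuals:
        raise ValueError("need equal-length, non-empty series")
    hits = sum(abs(f - a) <= tolerance * abs(a) for f, a in zip(forecasts, actuals))
    return hits / len(actuals)


def meets_sla(forecasts: list[float], actuals: list[float], target: float = 0.95) -> bool:
    return forecast_accuracy(forecasts, actuals) >= target


print(requires_human("financial_approval", confidence=0.99))  # True
print(meets_sla([100, 200, 50], [105, 230, 50]))             # False: 2 of 3 within 10%
```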

Phase 3: Scaling Challenges

Agent-to-agent communication becomes the bottleneck at scale, and emergent behaviors in complex multi-agent systems require continuous monitoring.

Security imperatives:

Enforce least-privilege access controls across autonomous agents

Conduct adversarial testing for unintended consequences in AI-driven actions

Implement Zero Trust architecture for agent-to-enterprise system interactions
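Zero Trust means every agent request is verified on its own merits rather than trusted because of where it came from. A minimal sketch of one piece of that, assuming a shared HMAC key and a timestamp freshness window (both illustrative; real deployments typically rely on short-lived tokens or mutual TLS issued by an identity provider):

```python
import hashlib
import hmac
import time
from typing import Optional

DEMO_KEY = b"demo-only-key"  # hypothetical; load real keys from a secrets manager


def sign(agent_id: str, action: str, ts: int, key: bytes = DEMO_KEY) -> str:
    message = f"{agent_id}|{action}|{ts}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(agent_id: str, action: str, ts: int, signature: str,
           now: Optional[int] = None, max_skew_s: int = 60, key: bytes = DEMO_KEY) -> bool:
    """Check each request independently: fresh timestamp and matching signature."""
    now = int(time.time()) if now is None else now
    if abs(now - ts) > max_skew_s:
        return False  # too old or too far in the future: treat as a possible replay
    expected = sign(agent_id, action, ts, key)
    return hmac.compare_digest(expected, signature)  # constant-time comparison


sig = sign("remediation_agent", "patch:web-03", ts=1_700_000_000)
print(verify("remediation_agent", "patch:web-03", 1_700_000_000, sig, now=1_700_000_030))  # True
print(verify("remediation_agent", "patch:db-01", 1_700_000_000, sig, now=1_700_000_030))   # False
```

The freshness window only narrows replay attacks; tracking and rejecting reused nonces would close that gap.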

I also host an AI podcast and content series called “Pioneers.” This series takes you on an enthralling journey into the minds of AI visionaries, founders, and CEOs who are at the forefront of innovation through AI in their organizations.

To learn more, please visit Pioneers on Beehiiv.

Wrapping Up

To harness agentic AI's potential, prioritize adaptive architectures over rigid systems. Modular designs with hybrid memory (vector DBs + RAG) enable dynamic problem-solving across supply chains and IT operations. Implement governance-first development: bake policy engines and audit trails into agent frameworks early, as financial institutions do for real-time transaction monitoring.

Key technical advice:

Start with bounded pilots (e.g., SAP order management) using synthetic data to mitigate cold starts

Design multi-agent systems with circuit breakers and privilege tiers. Healthcare organizations reduced patient data breaches by 60% through Azure AD-integrated access controls

Treat agents as team members: retrain quarterly with fresh market trends and conduct performance reviews using CI/CD metrics

The future belongs to human-agent symbiosis, where logistics bots negotiate rates and your team focuses on strategic innovation. As AWS implementations show, production-ready systems require obsessive instrumentation: two-tier logging (structured + human-readable) and CloudWatch dashboards for emergent behavior detection. Start small, but think in workflows, not tasks.

Until next week,

Ankur.