GPT-4, GPT-3, and GPT-3.5 Turbo: A Review Of OpenAI's Large Language Models

A rundown of the features of OpenAI's newest Large Language Model and a comparison of its capabilities with those of previous GPTs.

Key Takeaways

OpenAI released GPT-4 through a paid subscription on March 14, 2023. GPT-4 improves on the shortcomings of GPT-3 Davinci and of GPT-3.5 Turbo and its associated application, ChatGPT.

GPT-4 is capable of passing bar exams with a score around the 90th percentile, accepting multimodal input, and generating text with fewer errors than GPT-3 or GPT-3.5 Turbo.

OpenAI has stated that GPT-4 has the capacity to analyze any image given to it and generate a text summary of said image. However, OpenAI has not yet released this image summarization feature.

GPT-4’s max token span of 8k (and soon 32k) improves on GPT-3.5 Turbo’s 4k and GPT-3 Davinci’s 2k. Though GPT-4 still struggles with AI hallucination, the increase in max token span opens the potential for longer-form content such as articles, chapters, and even books.

GPT-4 and GPT-3.5 Turbo utilize an optimization technique known as reinforcement learning from human feedback (RLHF). This improvement allows the models to perform more accurately than GPT-3 Davinci, which was not trained using RLHF.

GPT-4 maintains OpenAI’s competitiveness in the field of generative AI, performing better than Google’s 2023 release of Bard and Microsoft’s 2023 release of its AI-powered Bing assistant.

This post is sponsored by Multimodal, a NYC-based development shop that focuses on building custom natural language processing solutions for product teams using large language models (LLMs).

With Multimodal, you will reduce your time-to-market for introducing NLP in your product. Projects take as little as 3 months from start to finish and cost less than 50% of newly formed NLP teams, without any of the hassle. Contact them to learn more.

GPT-4 aced the Bar Exam.

GPT-4 scored higher than most law students on the American bar exam. This milestone in artificial intelligence illustrates the rapid advances in Natural Language Processing (NLP) that have come out of OpenAI’s new release of GPT-4 this March. GPT-4 is an improvement on the wildly popular generative Large Language Model (LLM) GPT-3.5 Turbo, made widespread by OpenAI's browser application ChatGPT.

GPT-4 builds on what OpenAI learned after publicly releasing a research preview of ChatGPT, a chatbot-like application based on GPT-3.5 Turbo. But how do the improvements of GPT-4 compare to other iterations of OpenAI’s Large Language Models, GPT-3 Davinci and GPT-3.5 Turbo?

GPT-3

Generative Pre-trained Transformer 3, or GPT-3, builds on GPT-2, the AI language model OpenAI released in 2019. Like its predecessor, GPT-3 is a large language model that can produce strings of complex language when prompted through natural language.

Importantly, GPT-3, released in 2020, has 175 billion parameters. It was a landmark achievement in the capabilities of a Large Language Model. 60% of the data used in GPT-3’s model training was scraped from Common Crawl, a dataset that at the time of GPT-3’s release encompassed 2.6 billion stored web pages. The sheer size of the training data and the parameter count led to large leaps in accuracy over GPT-2.

There are multiple release versions of GPT-3, but in this article, we will reference the GPT-3 Davinci stable release.

Capabilities

In 2020, GPT-3 was the best generative model in existence. It outperformed all comparative models at the time, such as Google’s then-popular BERT.

GPT-3 brought an array of new possibilities to the table. Unlike BERT, GPT-3 could not only understand and analyze text but also generate it from scratch — be it an answer to a question, a poem, or a blog post heading.

The model also demonstrated notable improvements in terms of few-shot learning. Its ability to perform tasks with very little task-specific data was unmatched at the time. This meant that, unlike previous models, GPT-3 could perform reasonably well on a new task after being shown only a few examples of it in the prompt, without any additional training.
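As a rough illustration of what few-shot prompting looks like in practice, the sketch below assembles a prompt from a handful of worked examples followed by a new input. The function name, the `Input:`/`Output:` labels, and the sample reviews are all hypothetical choices made for this example; they are not part of any OpenAI API, and real prompts can be formatted in many other ways.

```python
def build_few_shot_prompt(examples, query, instruction=""):
    """Assemble a few-shot prompt: optional instruction, worked examples, then the new input."""
    lines = []
    if instruction:
        lines.append(instruction)
        lines.append("")
    # Each worked example shows the model the input/output pattern to imitate.
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")
    # The final entry is left open so the model completes the answer.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical sentiment-classification examples, invented for illustration.
examples = [
    ("The battery lasts all day.", "positive"),
    ("The screen cracked within a week.", "negative"),
]
prompt = build_few_shot_prompt(
    examples,
    "Setup took five minutes and just worked.",
    instruction="Classify the sentiment of each review.",
)
print(prompt)
```

The resulting string would be sent to the model as a single prompt; the two labeled reviews are the "few shots" that demonstrate the task.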

GPT-3's larger scale, unsupervised learning, few-shot learning, and generative capabilities made it a more powerful language model than BERT, particularly for tasks that involve generating new text. The model can produce lengthy, and relatively error-free, text from a natural language prompt. In fact, GPT-3 created articles when prompted that journalist testers had difficulty distinguishing from human-written articles.

Limitations

One problem with GPT-3 is AI hallucination, or when the model generates text that is not based on real-world knowledge or facts. This can happen when the model is presented with incomplete or ambiguous information or when it is asked to generate text about topics that it has not been trained on.

For example, if GPT-3 is asked to generate text about a hypothetical scenario that involves advanced technology that does not exist yet, it may produce text that includes details that are not possible or accurate. Similarly, if the model is asked to generate text about a complex scientific concept that it has not been trained on, it may confidently produce text that is inaccurate or misleading.

However, GPT-3 did markedly improve over GPT-2, which was much more prone to hallucinations.

Another limitation of GPT-3 is its lack of reasoning. The model relies on statistical patterns in the text it has been trained on and does not have a deep understanding of the world or the context in which the AI language model itself is used. This can lead to the model generating text that is technically correct but does not make sense in the broader context.

Finally, GPT-3 is trained on vast amounts of text data, which can reflect the biases and prejudices of the people who wrote it. If the training data is biased in some way, the model may learn and reproduce those biases in the text it generates.

The algorithms used to train GPT-3 may also be biased if they reflect the biases and assumptions of the people who designed them. For example, the algorithms may prioritize certain types of language or ideas over others, which can result in biased text generation.

Applications

Multiple applications are based on the GPT-3 model:

Chatbots: GPT-3 is being used to create more advanced chatbots that can understand natural language queries and respond in a more human-like way. These chatbots are being used for customer service, technical support, and other applications where users need assistance. A startup known as AskBrian utilizes AI to aid business professionals and consultants with problems related to business processes and management.

Content Creation: GPT-3 is also being used to generate content, such as news articles, product descriptions, and even fiction. These applications can save time and money for companies that need to produce a lot of content quickly like Marketing or Content Creators. The tool known as Jasper can build lengthy marketing copy, blog posts, and emails, shortening the workload for businesses.

Code Completion: OpenAI and GitHub have partnered to develop GitHub Copilot. Copilot is a cloud-based assistant that automatically completes code for developers who use Visual Studio Code, Visual Studio, Neovim, and JetBrains integrated development environments (IDEs). The tool was announced by GitHub on June 29, 2021 and is currently only available to individual developers through a subscription. It is most effective when used for coding in Python, JavaScript, TypeScript, Ruby, and Go.

GPT-3.5 Turbo (Model Behind ChatGPT)

GPT-3.5 Turbo, released on March 1st, 2023, is an improved version of GPT-3.5 and GPT-3 Davinci.

OpenAI utilized a development technique known as Reinforcement Learning from Human Feedback (RLHF) when developing GPT-3.5 Turbo. This method of model training involves human feedback ‘rating’ a large language model’s performance. GPT-3.5 is a more robust model with more accurate and policy-optimized responses due to the heavy employment of RLHF in development.
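To make the idea of human "ratings" more concrete, here is a minimal sketch of one common formulation from published RLHF work: a reward model scores two candidate responses, and a pairwise loss penalizes it when the response humans preferred receives the lower score. This is an illustrative assumption, not a description of how OpenAI trained GPT-3.5 Turbo; the function name and the example scores are hypothetical.

```python
import math


def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise preference loss: -log(sigmoid(reward_chosen - reward_rejected)).

    The loss is small when the reward model scores the human-preferred
    response higher, and large when it scores the rejected response higher.
    """
    margin = reward_chosen - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


# Hypothetical reward-model scores for two responses to the same prompt.
agrees_with_humans = preference_loss(reward_chosen=2.0, reward_rejected=-1.0)
disagrees_with_humans = preference_loss(reward_chosen=-1.0, reward_rejected=2.0)
print(agrees_with_humans < disagrees_with_humans)
```

In a full pipeline of this kind, a reward model trained with such a loss would then be used to guide further fine-tuning of the language model; that step is omitted here.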

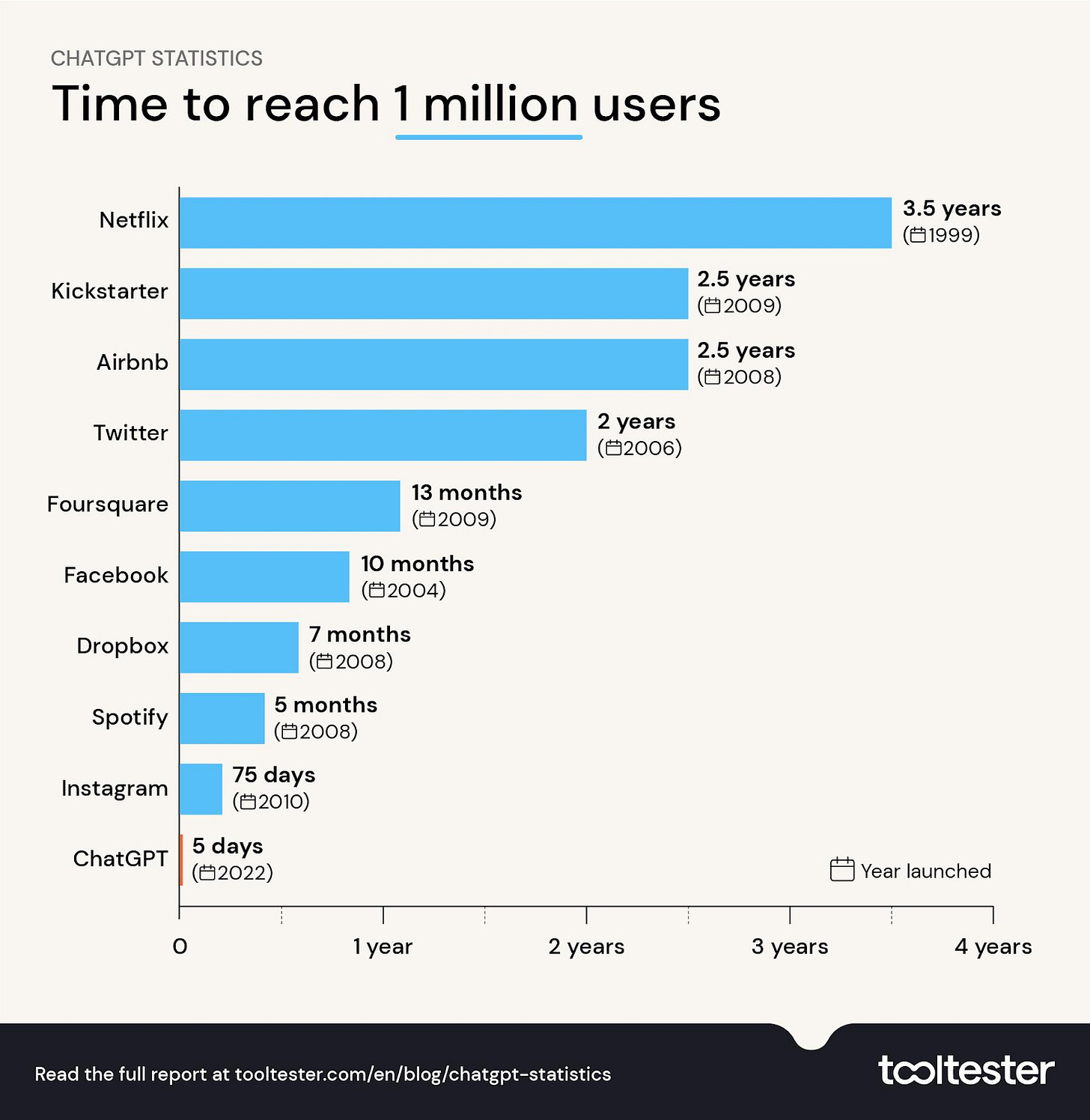

ChatGPT, a web-browser application based off of a model from the GPT-3.5 series, was released on November 30th, 2022 and quickly outpaced all other tech products in terms of user adoption. In only 5 days, ChatGPT reached 1 million users.

Capabilities

GPT-3.5 Turbo provides many of the same capabilities as GPT-3. However, GPT-3.5 Turbo proved to be capable of answering much more versatile questions and acting on a wider range of commands. GPT-3.5 Turbo is also less likely to have hallucinatory responses.

GPT-3.5 Turbo is the model behind the popular ChatGPT application.

The most important benefit of ChatGPT is that it is browser-based, user-friendly, and free to use. This is a huge benefit for businesses and individuals who want to use AI but perhaps did not have the technical knowledge or resources to work with it in the past.

This ease of access meant that users put a much wider range of questions to a system that OpenAI constantly improves through updates. Mass user testing also produced more bug and error reports.

ChatGPT can be used by enterprises to write marketing copy, draft emails, and translate languages. ChatGPT is modular and free and thus can be cheaply integrated into other software applications, making it a more flexible option for developers who want to incorporate language generation into their own products or services.

Limitations

GPT-3.5 Turbo is available to a much wider audience than GPT-3, due to it being available on the free browser app, ChatGPT. This created the ability for a much larger group of people to study and push the systems’ boundaries.

Like GPT-3, GPT-3.5 Turbo can hallucinate, confidently stating incorrect information.

Regarding toxic language, GPT-3.5 Turbo has been designed to provide responses while adhering to ethical standards of language use. However, GPT-3.5 Turbo and ChatGPT are only as good as the data they have been trained on, and there is a constant risk (as with GPT-3) that the text they generate may contain inappropriate or toxic language, depending on the source of the training data.

GPT-3.5 Turbo also has very limited knowledge of events past 2021, as the bulk of its training data comes from pre-2021. This limitation also applies to topics that are evolving rapidly or undergoing significant changes, such as technology, politics, and society.

Due to ChatGPT being wildly popular, free, and hosted non-natively, enterprises can find that ChatGPT or ChatGPT-based applications can be slower, unresponsive, and sometimes unreliable based on user demand.

One way around this problem is ChatGPT’s premium tier, ChatGPT Plus. OpenAI claims that ChatGPT Plus has much lower latency (and, as of March 2023, access to GPT-4). But at the time of writing, the option to purchase the $20 monthly subscription is unavailable due to overwhelming demand.

Applications

Due to the freemium nature of ChatGPT, many organizations have begun building on top of it and on GPT-3.5 Turbo. Organizations use the model for many of the same tasks for which GPT-3 has been used, such as copywriting, email writing, and web development.

Some of the enterprises currently using GPT-3.5 Turbo and ChatGPT in their applications are:

Microsoft has integrated ChatGPT into its Power Virtual Agents platform, which allows businesses to create chatbots to automate customer service interactions.

Adobe has integrated ChatGPT into its Experience Cloud platform, which allows businesses to create conversational AI applications for customer engagement and support.

Hugging Face offers an API for accessing ChatGPT, which developers can use to create chatbots, virtual assistants, and other conversational AI applications.

Discord has integrated ChatGPT into its platform to provide automated moderation and chat filtering.

GPT-4

OpenAI released GPT-4 to improve upon the user experience data gained from the free mass release of ChatGPT. The company continues to improve its model in a way that satisfies growing demand.

GPT-4 is available through the developer waitlist and ChatGPT Plus, though OpenAI has now paused subscription sign-ups for ChatGPT Plus due to overwhelming demand.

Capabilities

GPT-4 has many of the same use cases as GPT-3 and ChatGPT, in generative language and text summarization.

However, in some fields GPT-4 is much more accurate in its responses than GPT-3 and GPT-3.5 Turbo. For example, GPT-4 proved to be capable of passing the Bar Exam with flying colors.

In comparison, ChatGPT could only achieve slightly better than a 50% average on the Bar Exam, while GPT-3 Davinci did not even pass. GPT-4 is now outperforming the student average.

Besides being very talented at the bar exam, GPT-4 has marked improvements and capacities that GPT-3 and GPT-3.5 Turbo do not.

Longer Context: GPT-4 can handle longer context than GPT-3, with up to 25,000 words in the input. This can result in more accurate answers.

Token Length: GPT-4 has a larger max token window, with an 8k (8,192-token) span, doubling GPT-3.5’s max token span of 4,096 and roughly quadrupling GPT-3’s 2,049. GPT-4 utilizes this increased token span to build more accurate and longer answers, potentially leading to the automated creation of entire articles and book chapters.

Coding: GPT-4, like GPT-3, can create complex and usable code. However, GPT-4 has a better capacity for complex code generation and can build working websites, games, and task-oriented code with natural language prompts. It can also complete existing code snippets.

Computer Vision: GPT-4 has also been teased to bring more to the image-recognition sphere of artificial intelligence development, with OpenAI stating that GPT-4 has the capacity to analyze a given image and write “paragraphs of text” in an accurate description of that image.

Multimodality: GPT-4 has ‘multimodality’, or the capacity to utilize multiple forms of sensory perception. For example, the analysis of an input image can be used by GPT-4 to explain another input text. GPT-4 can use those multimodal literacies in tandem to approach problems.

RLHF: As with GPT-3.5 Turbo, OpenAI’s use of RLHF in the development of GPT-4 created improvements in robustness and policy optimization. One of the reasons for the vast leaps in the ‘intelligence’ of GPT-4 was the costly investment in human feedback. RLHF is an expensive and time-consuming technique for model training, and OpenAI invested heavily in the development of GPT-4.

Safeguards: Another point of improvement for GPT-4 is the prevention of ‘workarounds’ for the toxicity controls and terms of service that apply to ChatGPT and GPT-3. Previously, users could feasibly circumvent such safeguards by creating clever prompts to get ChatGPT to give a nefarious answer. GPT-4 is better at defending itself against such attacks. According to OpenAI, “GPT-4 is 82% less likely to respond to requests for disallowed content and 40% more likely to produce factual responses than GPT-3.5 on our internal evaluations.”

In terms of business use, GPT-4 is more capable of complex business writing than GPT-3.5 Turbo and GPT-3, which makes it a better, more productive bet than its predecessor services. For such tasks as copywriting or email writing, GPT-4 provides more accurate responses, making less work for the enterprise.

Limitations

GPT-4 appears to still suffer from the same lack of common sense that GPT-3.5 Turbo and ChatGPT do. For example, GPT-4 is exemplary in some areas, like passing the bar and the LSAT, but a dilettante in others, such as geographical knowledge.

Importantly, GPT-4 is behind a paywall for most enterprises, as its only current stable release is through ChatGPT Plus’s subscription service. Beyond that, OpenAI is granting under-the-hood access to GPT-4 for application development only to a small, select group of businesses.

Breaking with its own tradition, OpenAI has disclosed fewer technical details about GPT-4 than about previous iterations of the technology. This makes it more difficult for an enterprise to use GPT-4 for complex application development. The decision to withhold information has also made it harder to understand the improvements made in GPT-4 and to apply them for business purposes.

GPT-4 has a longer inference time than earlier GPT models. Hence, although more accurate and capable, the model is slower than GPT-3.5 Turbo and GPT-3.

Applications

Khan Academy is using GPT-4 to make a tutoring chatbot, under the name “Khanmigo”. Mass public access to the chatbot is limited, and users are selected from a waitlist. After acceptance to that waitlist, a fee of $20 per month is required to use the technology. The chatbot will be available for free to students and teachers from 500 school districts who have partnered with Khan Academy.

Duolingo has added GPT-4 to its application and introduced two new features, "Roleplay" and "Explain My Answer". These features are currently only available to English speakers who are learning French or Spanish.

Microsoft has confirmed that the versions of Bing that use a GPT model had been running on GPT-4 before it was officially released. On March 16, Microsoft announced that it will further integrate GPT-4 into its products, including Microsoft 365 Copilot, which will be embedded in apps like Word, Excel, PowerPoint, Outlook, Teams, and more.

Which GPT Language Model is right for you?

GPT-3, GPT-3.5 Turbo, and GPT-4 have distinct model parameters, max token span, and pricing.

While GPT-4 is the latest version of GPT and appears to be the most advanced LLM currently available, its release is limited. Gaining access to GPT-4 for business purposes may be out of reach for mid-size or even large corporations that lack the resources or social capital to gain entry into OpenAI’s small release. GPT-4 is a mid-range per-token pricing option.

GPT-3 Davinci is a great option for those looking to build using LLM technology, especially for those that lack the resources to build an in-house LLM. Its low latency and widespread availability make it a great option for developers using LLMs.

GPT-3.5 is also useful, especially for individuals and business professionals looking to work with the ChatGPT app and utilize LLMs without building them. Writing emails or generating copy can be done more cheaply and easily than ever before with ChatGPT’s accessible and free browser application. GPT-3.5 Turbo is the lowest-priced option for those wishing to build on it, with a per-token price roughly 1/10th that of GPT-3 Davinci.
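To make the max token span comparison concrete, the sketch below checks which models could hold a given prompt and reply within their context window. The token limits are the figures quoted in this article (with 8k and 32k written out as 8,192 and 32,768). The 0.75 words-per-token ratio is only a rough rule of thumb assumed for illustration, the model labels are informal names rather than API identifiers, and real token counts depend on the tokenizer and the text.

```python
import math

# Max token spans as quoted in this article; labels are informal, not API model names.
CONTEXT_WINDOW_TOKENS = {
    "GPT-3 Davinci": 2049,
    "GPT-3.5 Turbo": 4096,
    "GPT-4 (8k)": 8192,
    "GPT-4 (32k)": 32768,
}

# Assumption: roughly 0.75 English words per token. Real ratios vary by text.
WORDS_PER_TOKEN = 0.75


def estimate_tokens(word_count: int) -> int:
    """Roughly convert a word count into a token count."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def models_that_fit(prompt_words: int, reply_words: int) -> list[str]:
    """Return the models whose window can hold the prompt and the reply together."""
    needed = estimate_tokens(prompt_words + reply_words)
    return [name for name, limit in CONTEXT_WINDOW_TOKENS.items() if needed <= limit]


# A 3,000-word brief with a 1,500-word draft needs about 6,000 tokens.
print(models_that_fit(prompt_words=3000, reply_words=1500))
```

The prompt and the generated reply share the same window, which is why the check adds the two together before comparing against each limit.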

Conclusion

With the introduction of GPT-4 and the greater secrecy surrounding its internal makings, OpenAI has cemented itself as a dominant corporate player in the field of generative AI. Google’s Bard announcement, Microsoft’s Bing, and Baidu’s Ernie have all made waves in the field of generative AI, but OpenAI is the market leader.

Open source competitors such as GPT-Neo and GPT-J have also proven useful alternatives for organizations wishing to utilize AI.

The leaps in testing for GPT-4, beating human candidates in the bar and other exams, image description, safeguards for ethical content, and multimodality illustrate that GPT-4 is the most versatile model yet.

The overall industry is in a competitive race to build better, faster products, and it will be fascinating to see it unfold in the coming weeks.